Search Engine

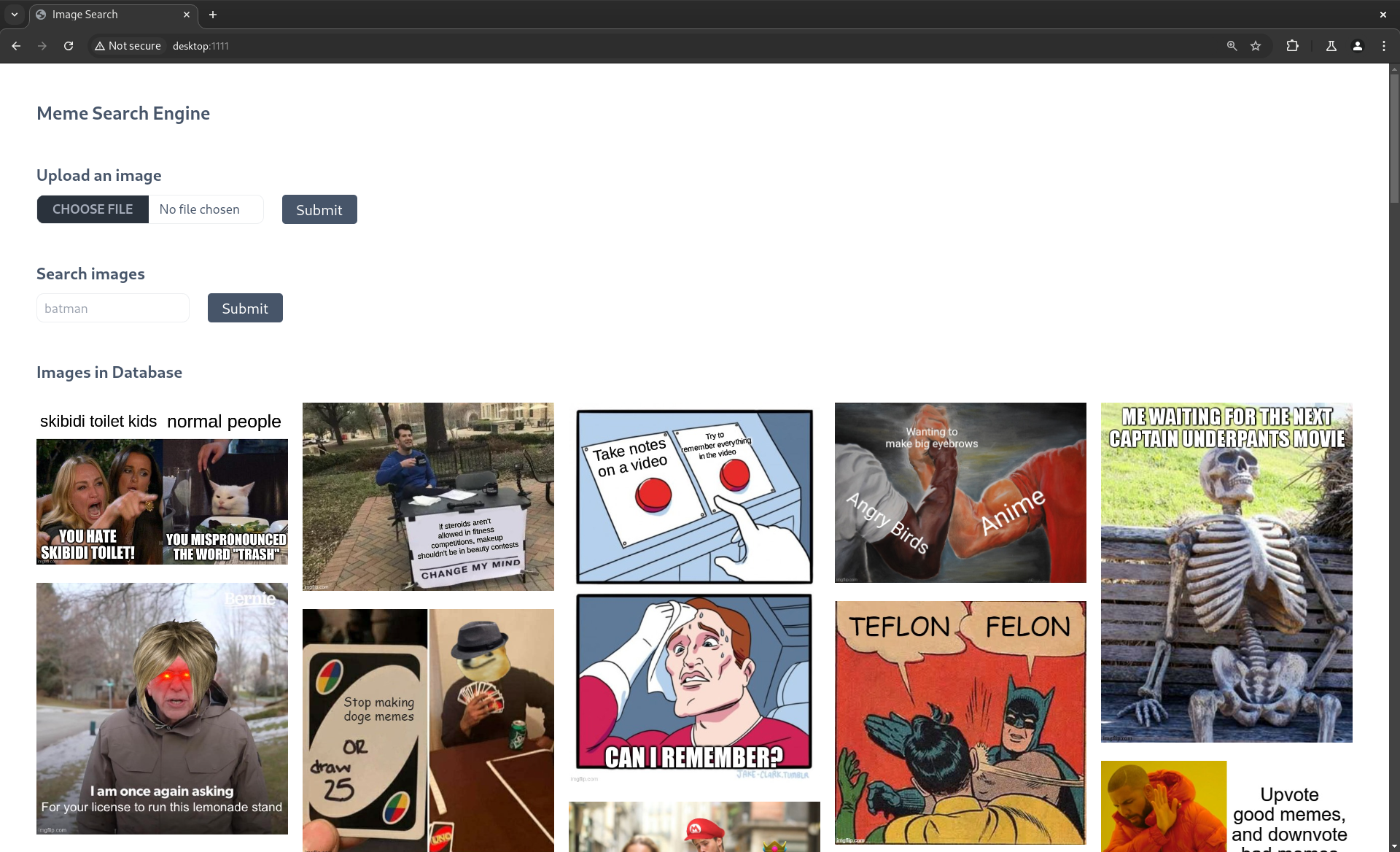

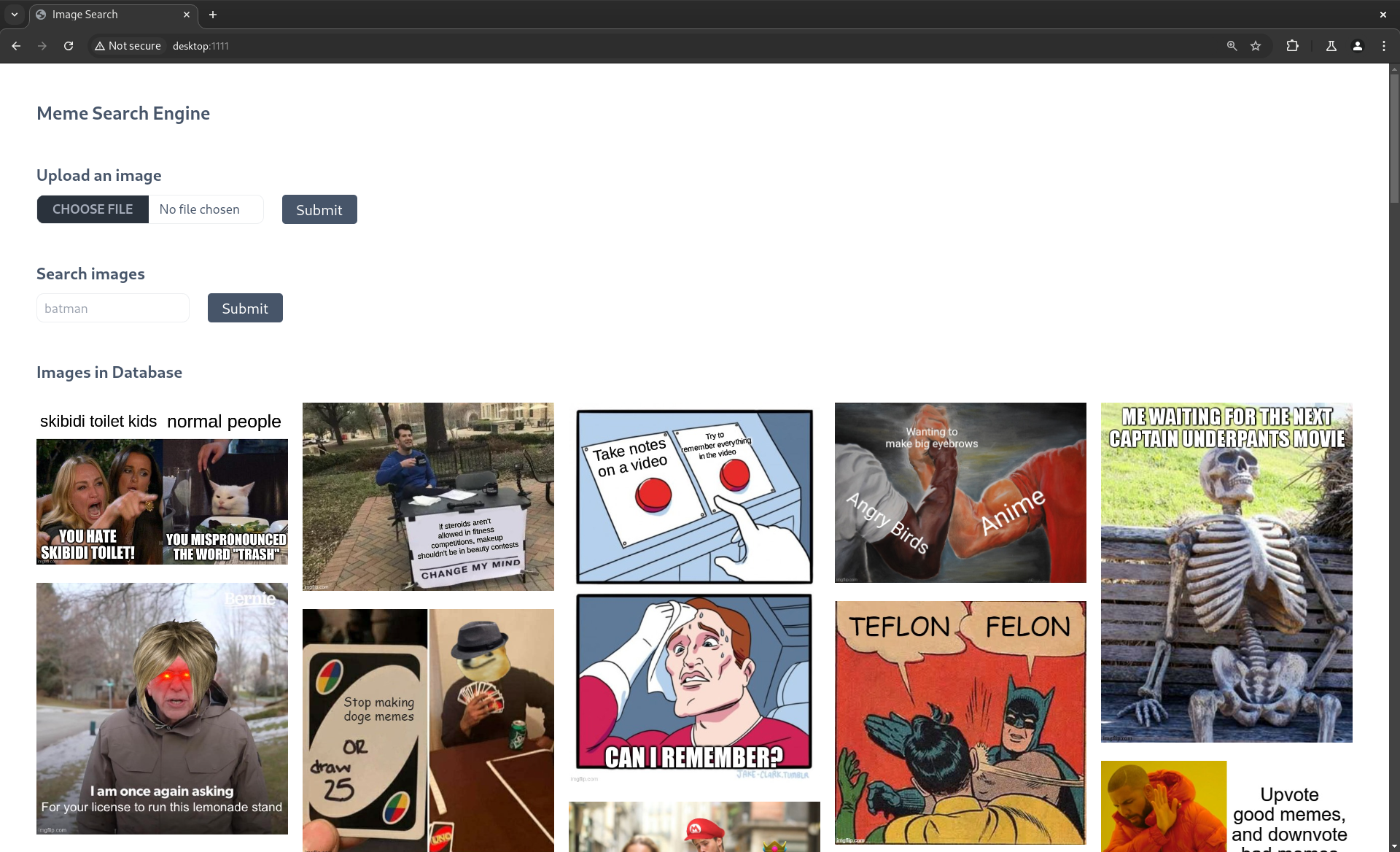

A reverse image search engine for fast meme search and retrieval

Tech stack: Daisy UI, DuckDB, FastAPI, Jinja, Python, PyTorch, and TailwindCSS

Update:

TL;DR: An image search engine powered by ResNet that lets users upload an image and instantly retrieve similar matches from its database. It uses PCA to compress the extracted feature vectors and cosine similarity to rank matches, which keeps search fast.

I present to you an image search engine that extracts image features with a pre-trained neural network and retrieves similar images from a dataset of memes.

The UI is built with Jinja2 templates for simplicity and TailwindCSS for quick styling. The backend uses FastAPI because it's lightweight and easy to use. For the deep learning framework, I chose PyTorch because its API is easy to use and its documentation is well written. Most importantly, many pre-trained models are readily available for it. For the database, I chose DuckDB because of its speed and plugin support.

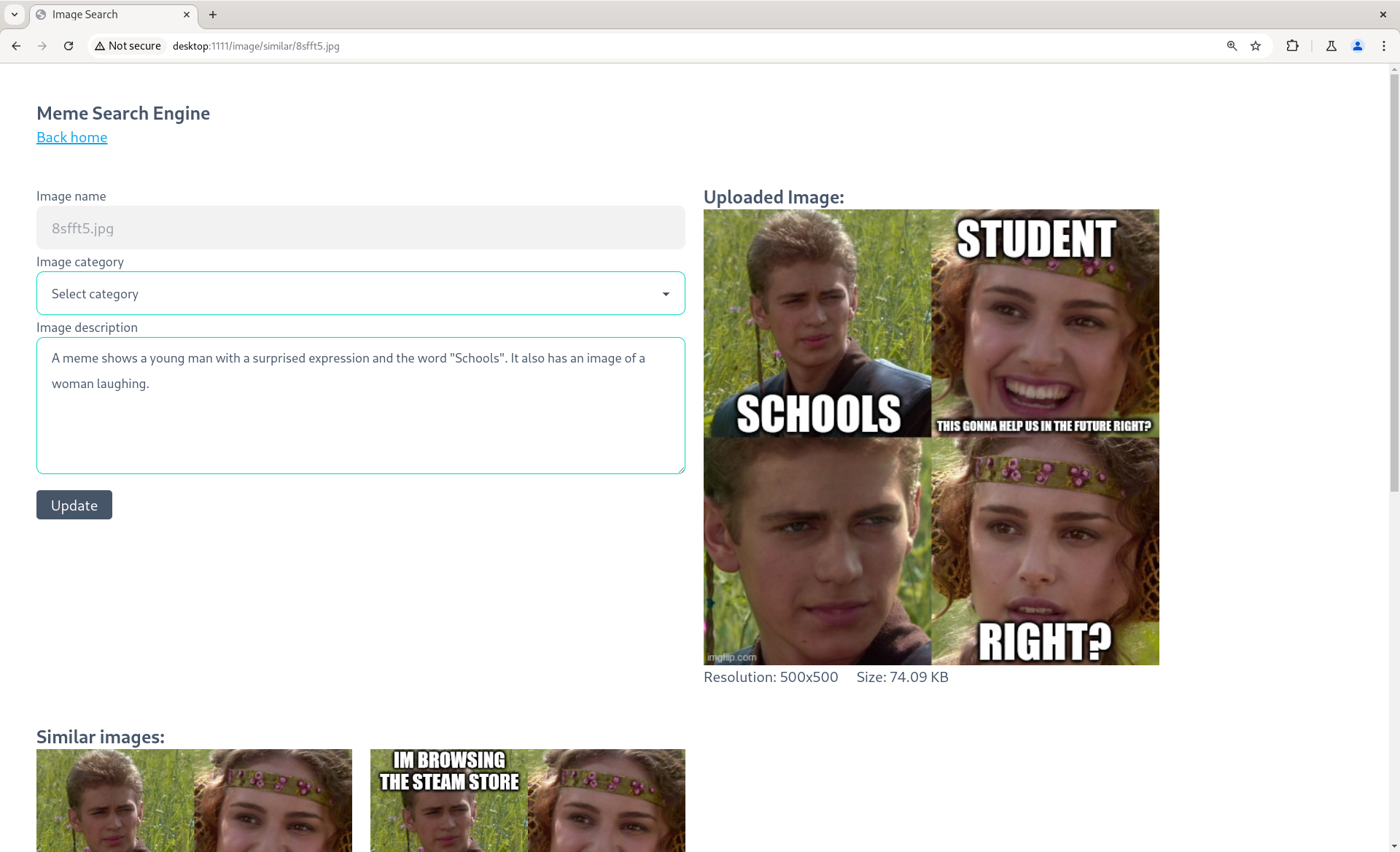

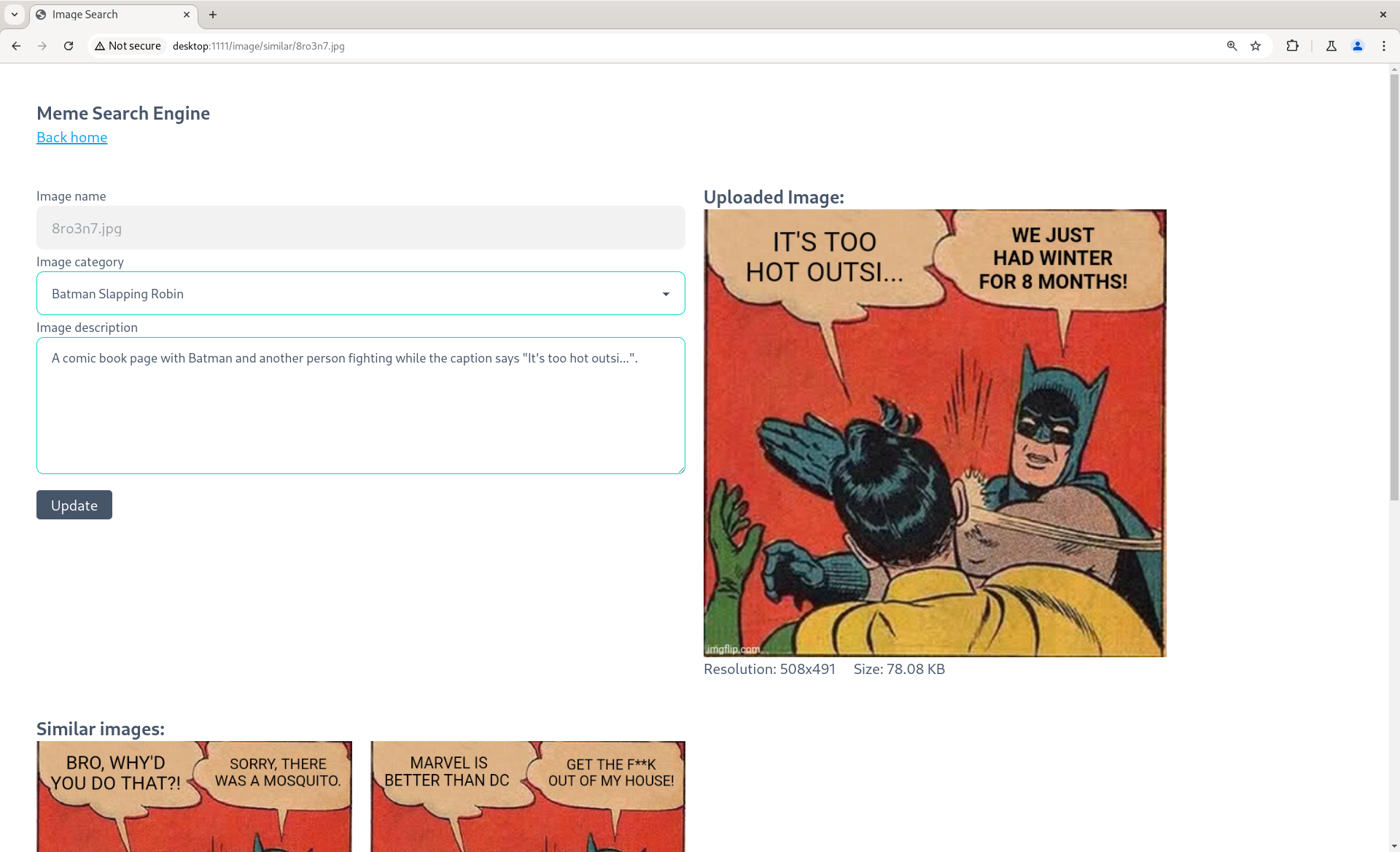

The image search engine allows users to find images in the database using a single image.

Once the query image is uploaded, the system preprocesses it, extracts its features with a modified ResNet model, and represents them as a -dimensional vector.

Finally, the cosine similarity between the query image's feature vector and the feature vector of every image in the database is calculated. The score lies in a range of to , with indicating not identical and indicating identical.
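The ranking step can be illustrated with NumPy. This is a minimal sketch, assuming the database features are held in memory as a matrix with one row per image; `rank_images` and its `top_k`/`min_score` parameters are hypothetical, with `min_score` standing in for a similarity cutoff. Vectors are L2-normalized first, so a plain dot product equals the cosine similarity.

```python
import numpy as np


def cosine_similarity(query: np.ndarray, database: np.ndarray) -> np.ndarray:
    """Cosine similarity between a query (d,) and every row of database (n, d).

    Assumes no vector is all zeros (that would divide by zero).
    """
    query_unit = query / np.linalg.norm(query)
    db_unit = database / np.linalg.norm(database, axis=1, keepdims=True)
    return db_unit @ query_unit  # shape (n,)


def rank_images(query, database, top_k=5, min_score=None):
    """Return (row index, score) pairs sorted from most to least similar."""
    scores = cosine_similarity(query, database)
    order = np.argsort(-scores)[:top_k]  # highest scores first
    results = [(int(i), float(scores[i])) for i in order]
    if min_score is not None:
        # Optional cutoff: keep only sufficiently similar images.
        results = [(i, s) for i, s in results if s >= min_score]
    return results
```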

Every image that gets uploaded to the search engine will be assigned a description using a multi-modal language model before being stored in the database. This allows users to search for images based on their descriptions.

When users enter keywords into the search bar, the search engine turns them into an embedding vector and compares it with the embedding vectors of the image descriptions in the database. The smaller the distance between the keyword embedding and an image description embedding, the more relevant the image is.
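Keyword search follows the same pattern on the text side. In this sketch, `embed_text` is a hypothetical placeholder for whatever text-embedding model the project uses (the source doesn't name it), and `description_embeddings` is assumed to hold one precomputed embedding per stored image description. Measuring closeness with cosine similarity here is also an assumption.

```python
from typing import Callable

import numpy as np


def search_by_keywords(
    keywords: str,
    description_embeddings: np.ndarray,  # (n, d): one row per image description
    embed_text: Callable[[str], np.ndarray],  # hypothetical text-embedding function
    top_k: int = 5,
) -> list[tuple[int, float]]:
    """Rank stored images by how close their description embedding is to the keywords."""
    query = embed_text(keywords)
    query = query / np.linalg.norm(query)
    db = description_embeddings / np.linalg.norm(
        description_embeddings, axis=1, keepdims=True
    )
    scores = db @ query  # cosine similarity per description
    order = np.argsort(-scores)[:top_k]  # most relevant first
    return [(int(i), float(scores[i])) for i in order]
```

Because `embed_text` is passed in, the same function works with any model that maps a string to a fixed-length vector, as long as the stored description embeddings came from the same model.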

I decided to use ResNet50 since it's capable of capturing more complex features in images than ResNet18. However, it requires more memory and computational power. In return, the search engine is able to provide more accurate results.

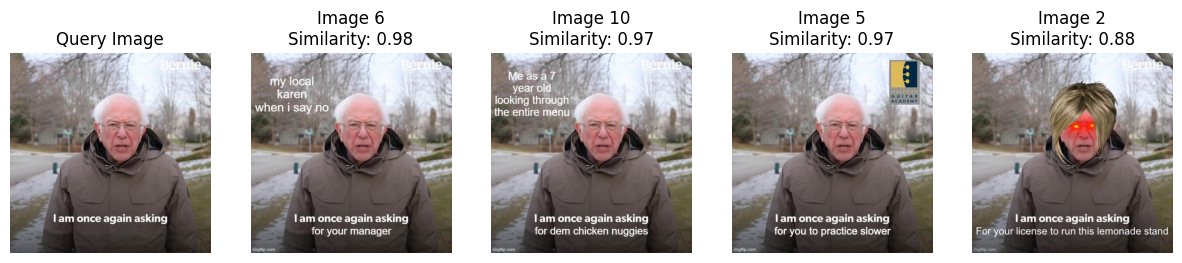

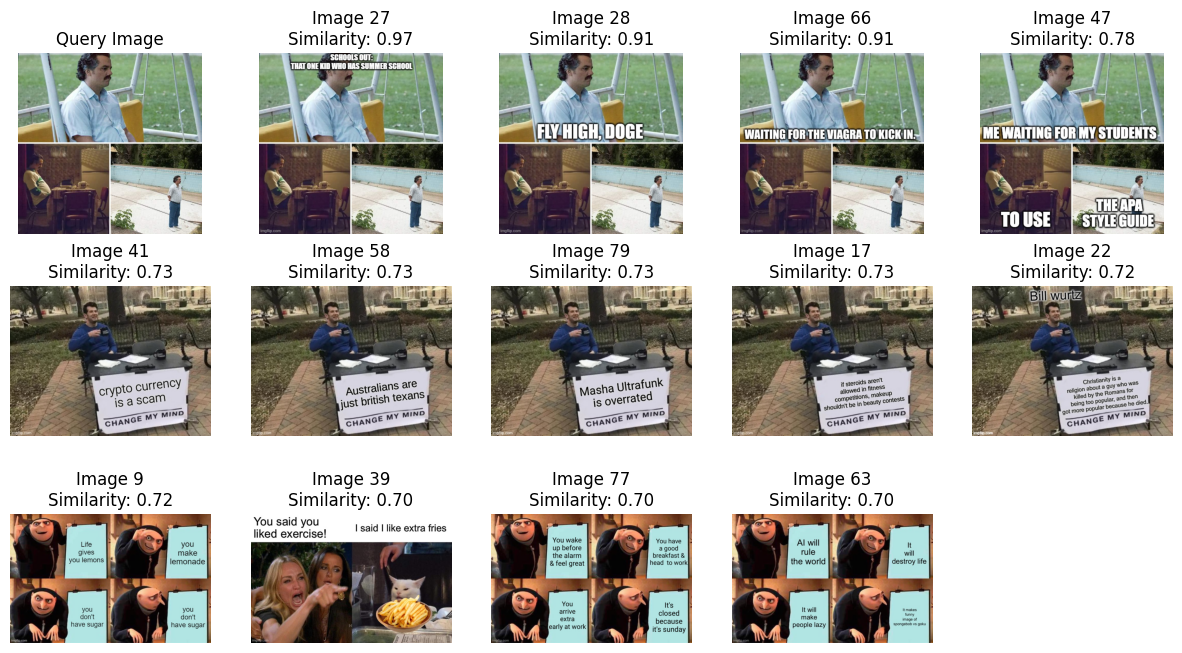

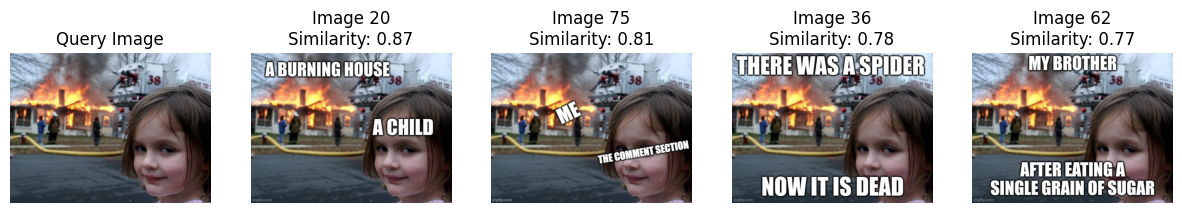

These figures show the images whose similarity to the query image is above , using ResNet18.

With ResNet18 as the feature extraction model, the search engine is more likely to return images that share the query image's feature composition even though their content is completely different.

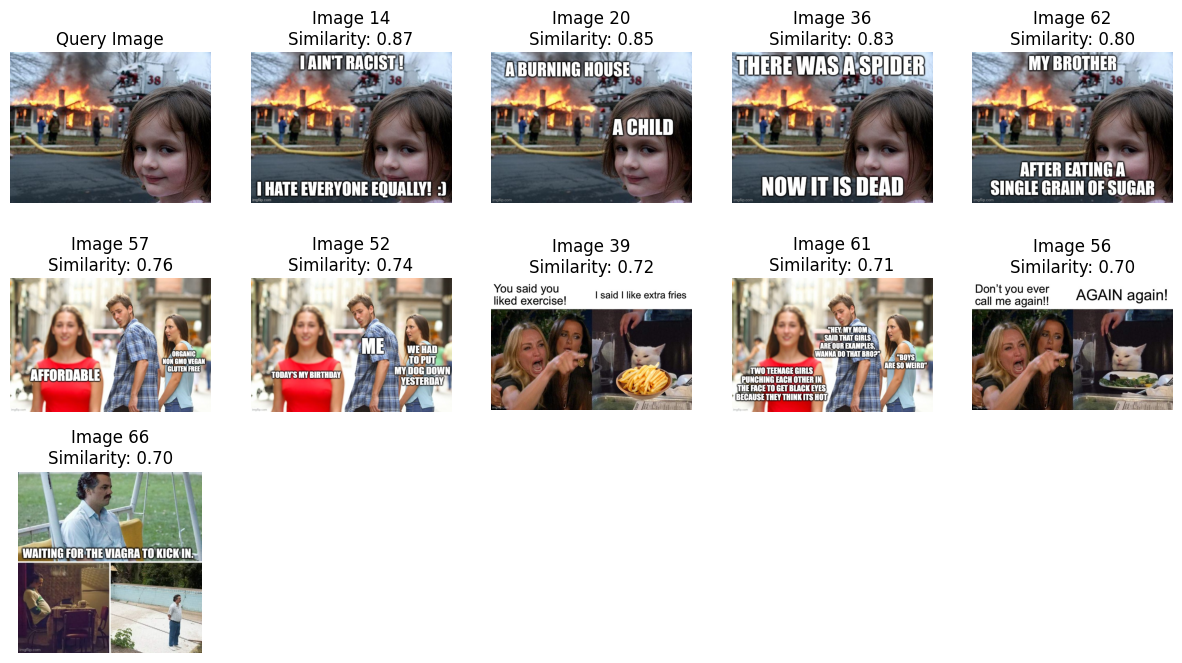

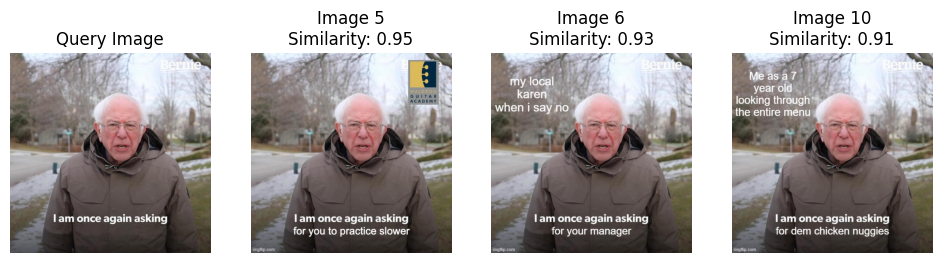

Similarly, these figures show the images whose similarity to the query image is above , using ResNet50.

With ResNet50, the unrelated matches returned by ResNet18 no longer appear, because ResNet50 is capable of capturing more complex features in images.

With Principal Component Analysis (PCA), the dimensionality of the image features can be reduced from to . This speeds up the cosine similarity calculation and reduces memory usage.
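PCA can be fitted with a singular value decomposition in NumPy. This is a sketch under assumptions: the helper names are hypothetical, and the author may well have used a library implementation such as scikit-learn's `PCA` instead. The same `mean` and `components` must also be applied to every query vector before computing cosine similarity; otherwise the query and database vectors would live in different spaces.

```python
import numpy as np


def fit_pca(features: np.ndarray, n_components: int):
    """Fit PCA via SVD; returns (mean, components, explained_variance_ratio)."""
    mean = features.mean(axis=0)
    centered = features - mean
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    variance = s ** 2
    ratio = variance / variance.sum()
    return mean, vt[:n_components], ratio[:n_components]


def transform(features: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Project features (n, d) onto the retained components, giving (n, k)."""
    return (features - mean) @ components.T


def components_for_variance(features: np.ndarray, target: float = 0.95) -> int:
    """Smallest number of components whose cumulative explained variance reaches target."""
    centered = features - features.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)
    ratio = s ** 2 / np.sum(s ** 2)
    k = int(np.searchsorted(np.cumsum(ratio), target) + 1)
    return min(k, len(ratio))  # guard against rounding when target is close to 1
```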

With dimensions, the principal components explain of the variance in the original dimensions, and the space required is reduced from bytes to bytes. That's a size reduction of !
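The storage figures follow from simple arithmetic: a dense vector takes its length times the bytes per value. This sketch assumes float32 storage (4 bytes per value) and ignores database overhead; the 2048 and 256 dimensions in the usage note are illustrative, not the post's actual numbers.

```python
def vector_storage_bytes(dim: int, n_vectors: int = 1, bytes_per_value: int = 4) -> int:
    """Raw bytes for n_vectors dense vectors of length dim.

    bytes_per_value=4 assumes float32; use 8 for float64.
    """
    return dim * n_vectors * bytes_per_value


def size_reduction(original_dim: int, reduced_dim: int) -> float:
    """Fraction of storage saved by shortening vectors (same dtype)."""
    return 1 - reduced_dim / original_dim
```

For example, `vector_storage_bytes(2048)` gives 8192 bytes and `vector_storage_bytes(256)` gives 1024 bytes, and `size_reduction(2048, 256)` is 0.875, i.e. an 87.5% saving per vector.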

By transitioning from JSON to DuckDB and reducing the vector dimensions, search speed improves significantly while using less RAM and storage. Storing the image features of images with a dimension of in DuckDB requires MB of space, and searching for similar images takes seconds.

In contrast, storing the image features of the same images with a dimension of in DuckDB requires MB of space, and searching for similar images takes seconds.