System Architecture


A lightweight scheduling system for deep learning models

Schedulearn, short for Schedule + Learn, is a lightweight scheduling system for deep learning models. It is designed to be simple and easy to use, so that users can focus on developing their models without constantly worrying about resources.

This project started as someone else’s thesis project, originally built with Kubernetes as the orchestrator, Go as the backend language, and MongoDB as the database. Looking at the codebase, it occurred to me that the system was made in a hurry, too complex for a prototype scheduling system, and resource hungry. Running MongoDB alone required 200+ MB of RAM, and the entire system required 500+ MB. Even though the backend was written in Go, there was too much redundant and unnecessary code, leading to poor code quality, maintainability, and performance.

In the end, I decided to rewrite the entire project using Docker, Python, and SQLite. Even though the rewrite is in Python, the entire system only requires 100+ MB of RAM. The SQLite DB file containing all training metadata only requires about 100 KB of storage. The rewrite resulted in a dramatically smaller codebase, reduced to just 25% of its original size, and an 80% reduction in resource requirements.

The following is the list of requirements that the system should meet. The system should be able to:

The system is built using the following technologies:

In the figure above, you can see that there are three main components in the system: the API, the servers, and the user interface. The API endpoints handle user requests from the user interface, such as creating, updating, and deleting models. The database stores the models’ metadata. The scheduler, which lives in the same place as the API, is the core of the system and schedules the models depending on the resources available in the cluster. In other words, it decides where each model should be trained and how many resources each model should use.

When a job is submitted, it goes through several steps before its results are sent back to the user.

The system consists of three servers, and each server has 4 GPUs. The following are the specifications of the entire system:

Three scheduling algorithms exist within the system:

These algorithms were not usable out of the box, so I had to customize them to fit the system’s requirements. I couldn’t find a way to express the pseudocode in Markdown, so I will use Python instead. Note that the algorithms you will see in the following sections are much simpler than the actual implementation.

```python
def fifo(required_gpus: int) -> dict | None:
    for server in ['gpu3', 'gpu4', 'gpu5']:
        gpus = get_gpus(server)  # get all GPUs on this server
        available_gpus = ...  # include only GPUs with less than 90% VRAM usage
        if len(available_gpus) >= required_gpus:
            result = {'server': server, 'gpus': []}
            for gpu in available_gpus[:required_gpus]:
                result['gpus'].append(gpu.id)
            return result
    return None
```

The algorithm checks each server’s availability by going through each GPU in the server. If a GPU has less than 90% VRAM usage, it is included in the list of available GPUs.

Once the list of available GPUs is ready, the algorithm checks whether the number of available GPUs is greater than or equal to the number of GPUs required by the job. If so, the algorithm returns the server and the selected GPUs in that server. Otherwise, it moves on to the next server.

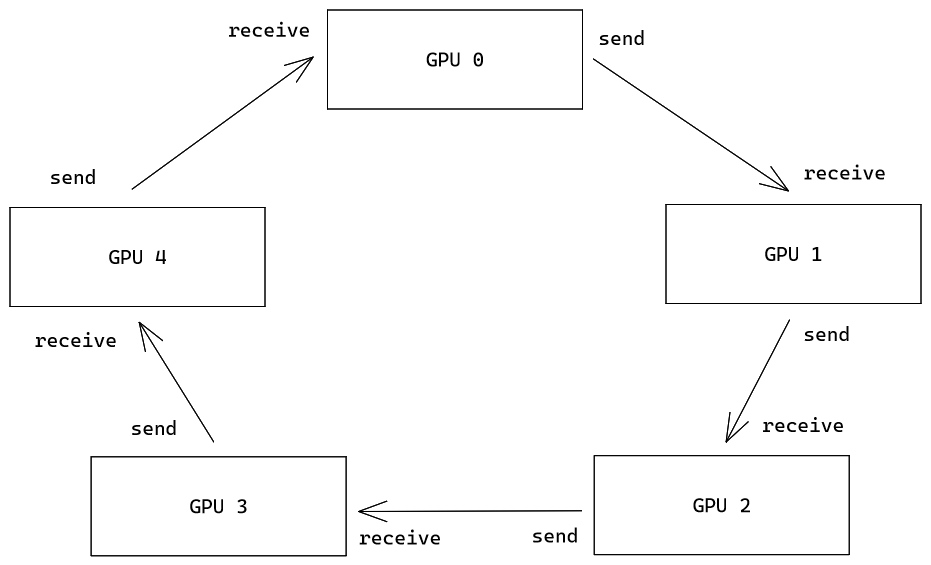

To implement Elastic FIFO, I still use the same FIFO algorithm that you saw above. To make it possible, the system must be able to scale out jobs, as well as move jobs from one server to another on the fly.

Whenever a job completes, the scheduler determines which job is the slowest among those still running. If there is one, the system checks how long it has been running. If it has been running too long, the system scales the job out by adding more GPUs to it, or possibly migrates it to another server with more GPUs.

However, there are some drawbacks to killing the job, looking for available resources, migrating, and restarting it. The drawbacks are:

Ideally, it would be better to scale out only the important jobs, those with the most weights and the fewest resources. However, it’s not possible to know which jobs are important, since the system does not know the model’s architecture and I did not implement any metric to measure the importance of a job. Therefore, I decided to scale out all jobs that run too long. In my case, jobs that run too long are more likely to be failed or stuck jobs.
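The decision made after each job completion could be sketched roughly as follows. This is an illustration under several assumptions, not the real scheduler: "slowest" is interpreted as the job that started earliest, `max_runtime` is a hypothetical threshold, and the `RunningJob` fields and the preference for scaling in place before migrating are my own simplifications.

```python
from dataclasses import dataclass


@dataclass
class RunningJob:
    name: str
    server: str
    gpus: int          # GPUs currently assigned to the job
    started_at: float  # start time in seconds (hypothetical field)


def pick_job_to_scale(jobs, now, max_runtime):
    """Return the longest-running job if it exceeded max_runtime, else None."""
    if not jobs:
        return None
    slowest = min(jobs, key=lambda job: job.started_at)
    if now - slowest.started_at > max_runtime:
        return slowest
    return None


def plan_scale_out(job, free_gpus):
    """Choose between adding GPUs in place and migrating.

    free_gpus maps a server name to its number of free GPUs.
    """
    extra = free_gpus.get(job.server, 0)
    if extra > 0:
        # Free GPUs exist on the job's own server: scale in place.
        return {"action": "scale", "server": job.server, "gpus": job.gpus + extra}
    # Otherwise look for the server with the most free GPUs.
    best = max(free_gpus, key=free_gpus.get, default=None)
    if best is not None and free_gpus[best] > job.gpus:
        return {"action": "migrate", "server": best, "gpus": free_gpus[best]}
    return None  # nothing better is available right now


jobs = [RunningJob("a", "gpu3", 2, 0.0), RunningJob("b", "gpu4", 1, 500.0)]
candidate = pick_job_to_scale(jobs, now=1000.0, max_runtime=600.0)
print(plan_scale_out(candidate, {"gpu3": 0, "gpu4": 1, "gpu5": 4}))
# {'action': 'migrate', 'server': 'gpu5', 'gpus': 4}
```

A migration only pays off when the target offers strictly more GPUs than the job already has, which is why the sketch returns `None` otherwise.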

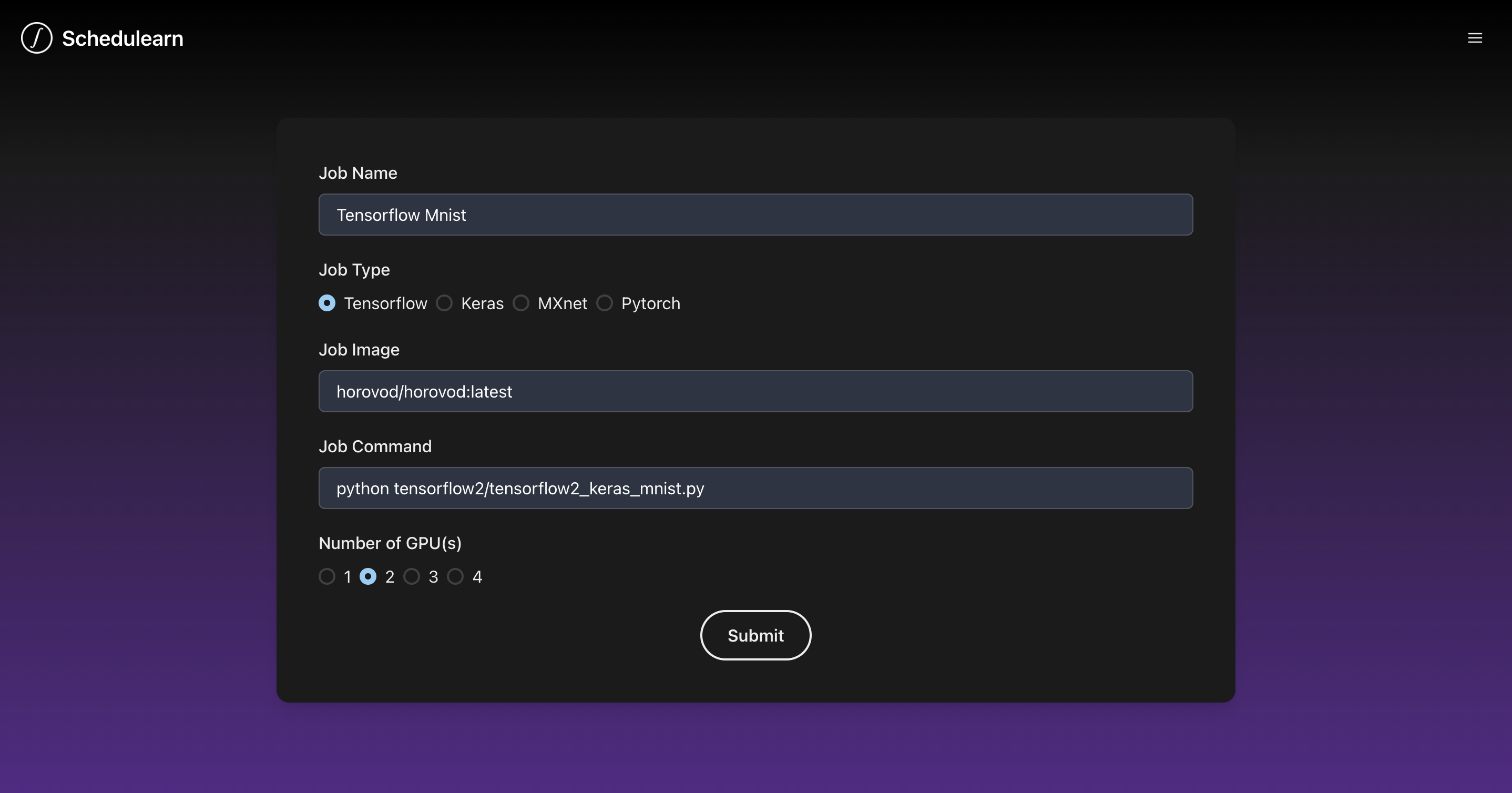

Job submission can be done on the user interface, or by sending a POST request to the API. The following is an example of a job submission request:

```python
import requests

r = requests.post(
    'http://localhost:5000/jobs',
    json={
        "name": "Pytorch Mnist",
        "type": "Pytorch",
        "container_image": "nathansetyawan96/horovod",
        "command": "python pytorch/pytorch_mnist.py",
        "required_gpus": 4,
    },
)
```

Once the job is accepted, you will see a response like the following.

HTTP/1.1 201 Createdcontent-length: 38content-type: application/jsondate: Wed, 09 Nov 2022 15:03:41 GMTserver: uvicorn

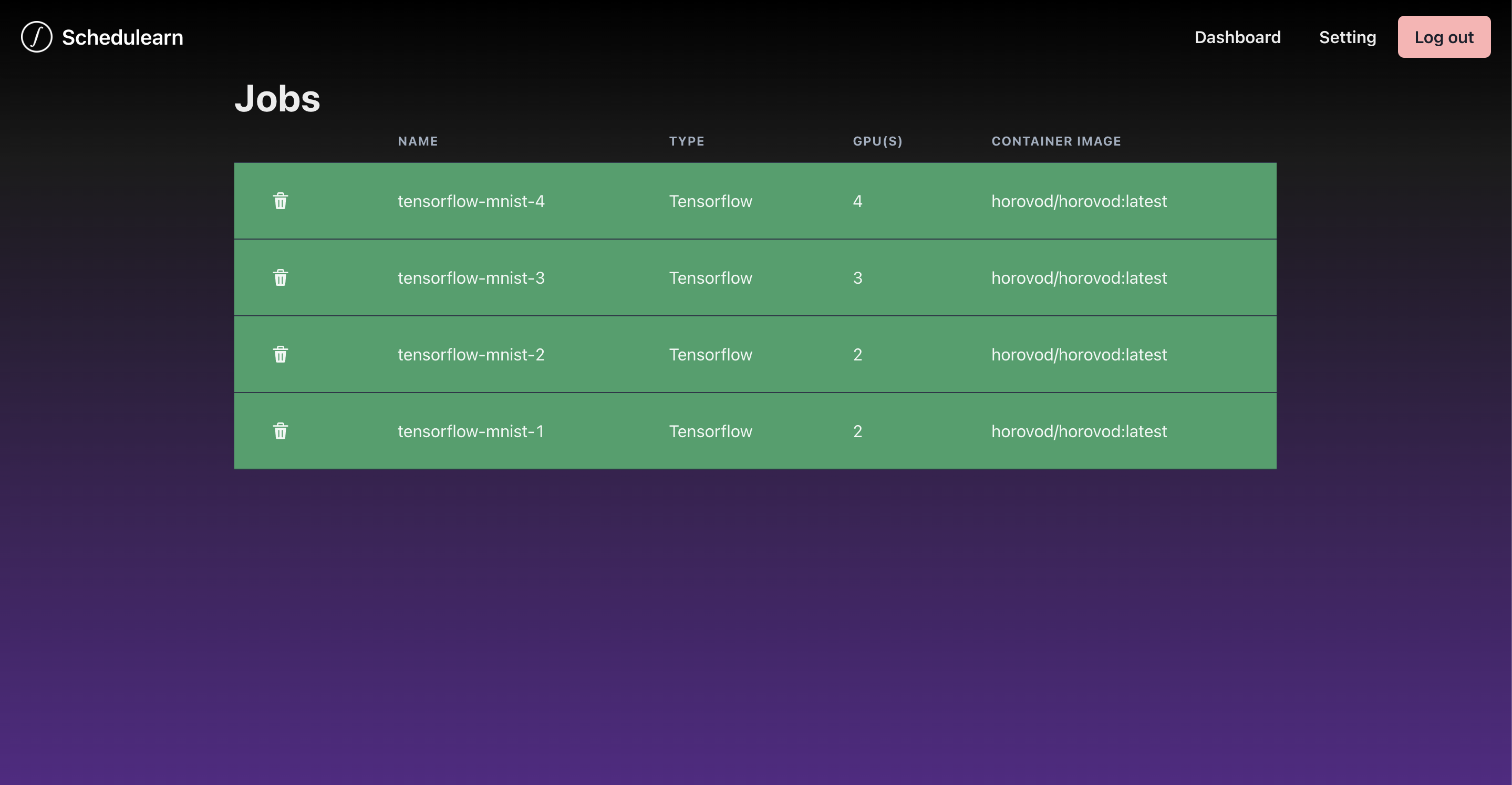

{ "message": "Job created successfully"}I also made a user interface to show all jobs in the system, as well as their status. Knowing the status of each job, whether the job is running, completed, or failed, would make debugging much easier.

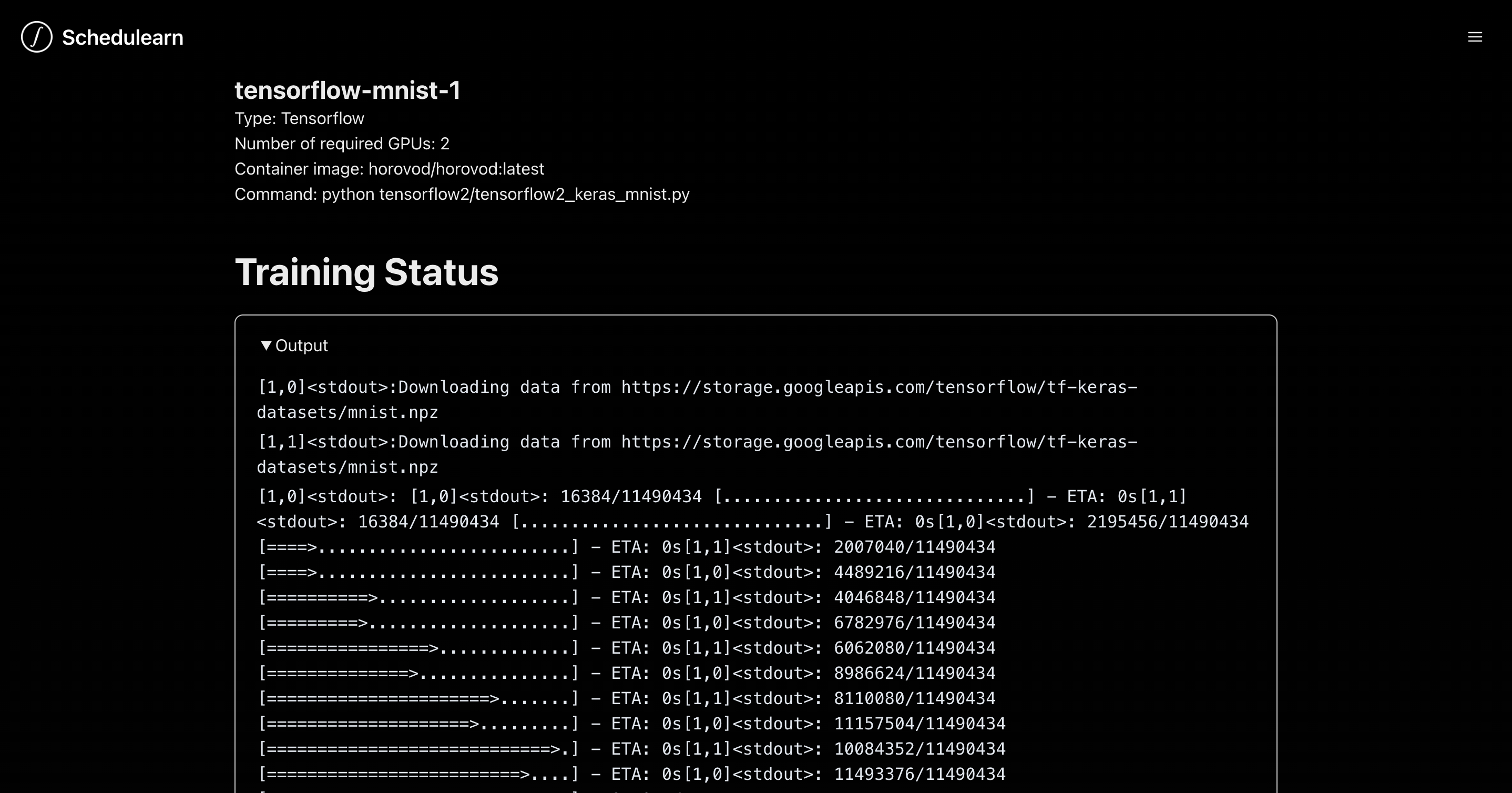

The output of a job running in the system can be streamed to the user interface. If a job is stuck, it is easier to check its output in the UI than to SSH into the server and check the output of the job’s Docker container.

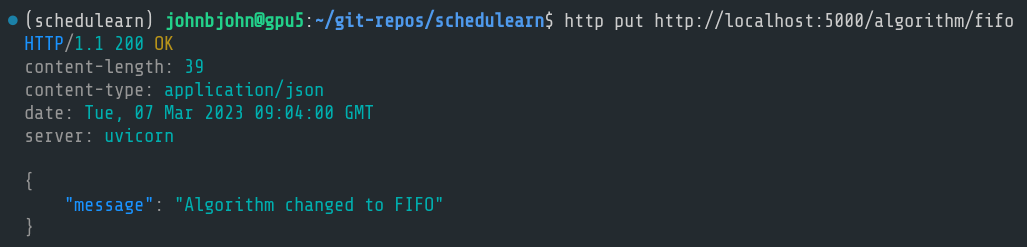

Changing the scheduling algorithm can be done by sending a PUT request to the API.

http PUT localhost:5000/algorithm/elasticfifo

Changing it back to FIFO can be done by sending another PUT request to the API.

http PUT localhost:5000/algorithm/fifo
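For scripts that already talk to the API from Python, the same switch could be done with the standard library. This is only a sketch: the helper names `build_algorithm_request` and `set_algorithm` are hypothetical, and the URL pattern is taken from the two commands above.

```python
from urllib.request import Request, urlopen

API_URL = "http://localhost:5000"  # same host and port as the commands above


def build_algorithm_request(name: str) -> Request:
    """Build a PUT request that selects the scheduling algorithm by name."""
    return Request(f"{API_URL}/algorithm/{name}", method="PUT")


def set_algorithm(name: str) -> int:
    """Send the request and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for 4xx and 5xx responses.
    """
    with urlopen(build_algorithm_request(name)) as response:
        return response.status


request = build_algorithm_request("elasticfifo")
print(request.get_method(), request.full_url)
# PUT http://localhost:5000/algorithm/elasticfifo
```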

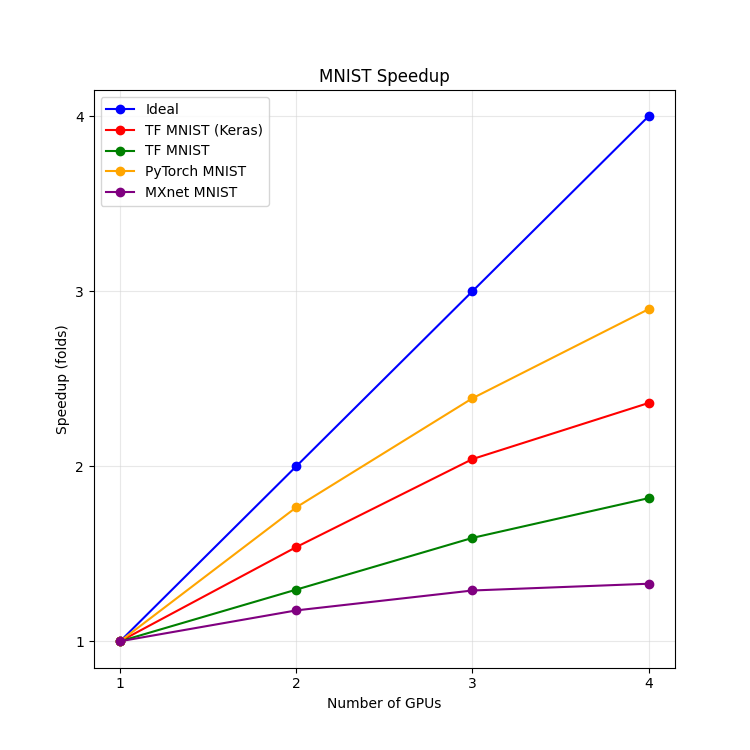

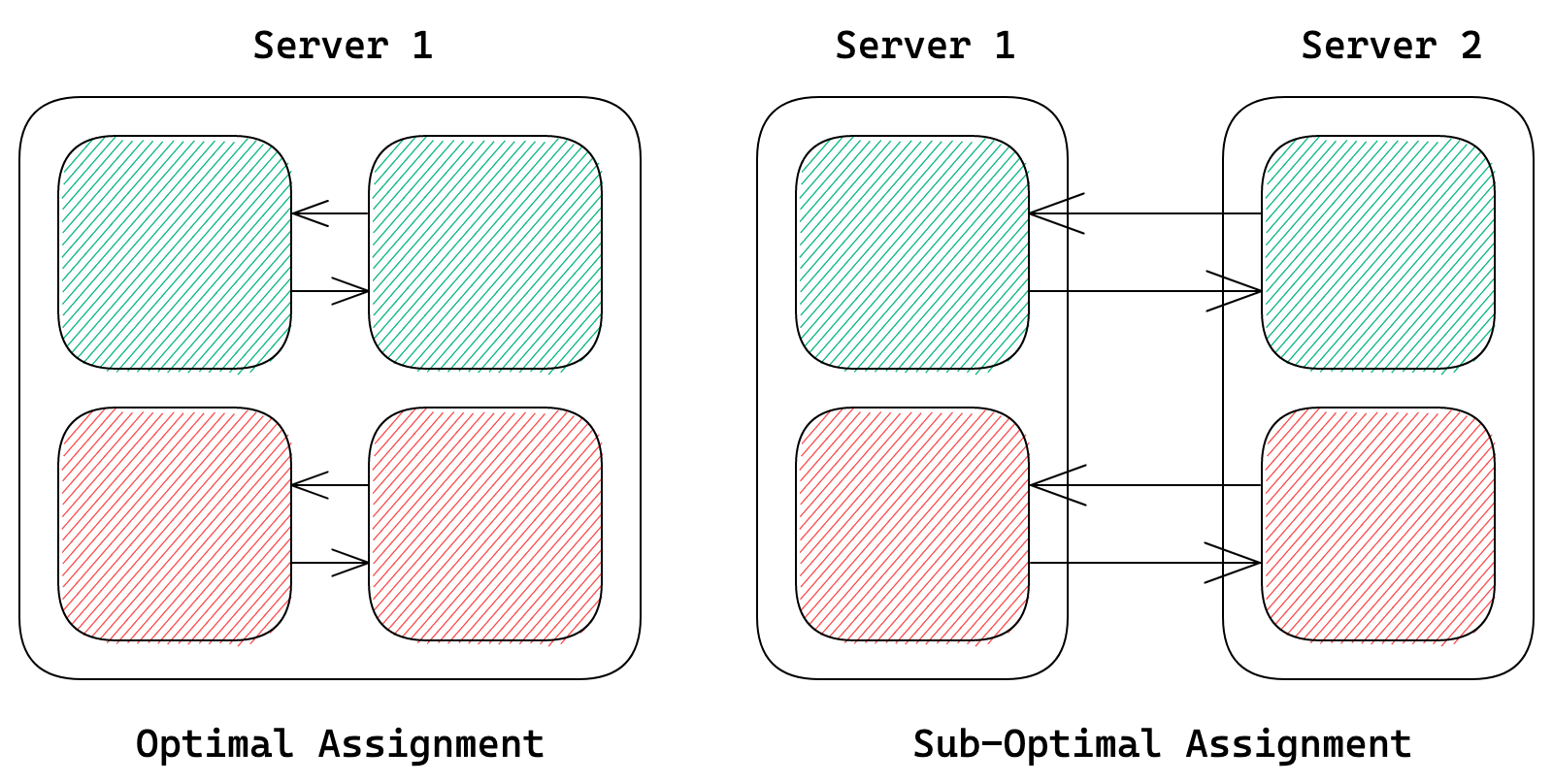

Due to security measures imposed by the lab that I worked for, I was unable to train models with multiple GPUs from different servers, e.g. 4 GPUs in server A and 2 GPUs in server B. Besides, assigning more GPUs does not translate to a one-to-one performance increase: training a model with 4 GPUs does not mean that the model will be trained 4 times faster, assuming that 1 GPU is 1x speed.

Because of this, each job is only assigned GPUs from the same server. At the time, I saw this as a feasible way to reduce communication costs.

Having multiple GPUs requires the data to travel more, which already imposes a significant overhead. The further the data travels, the more overhead it imposes. Therefore, it’s better to assign multiple GPUs from the same server to a given job.
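The point that 4 GPUs do not give a 4x speedup can be quantified with two common measures: speedup (time on 1 GPU divided by time on n GPUs) and scaling efficiency (speedup divided by n). The sketch below uses made-up timings purely for illustration; they are not measurements from this project.

```python
def speedup(time_one_gpu: float, time_n_gpus: float) -> float:
    """How many times faster the job runs compared with a single GPU."""
    return time_one_gpu / time_n_gpus


def scaling_efficiency(time_one_gpu: float, time_n_gpus: float, n_gpus: int) -> float:
    """Fraction of the ideal n-times speedup that was actually achieved."""
    return speedup(time_one_gpu, time_n_gpus) / n_gpus


# Hypothetical timings in seconds, not results from this project.
t1, t4 = 400.0, 125.0
print(speedup(t1, t4))                # 3.2
print(scaling_efficiency(t1, t4, 4))  # 0.8
```

An efficiency below 1.0 is the communication overhead showing up in the numbers, and it tends to get worse the further the data has to travel.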

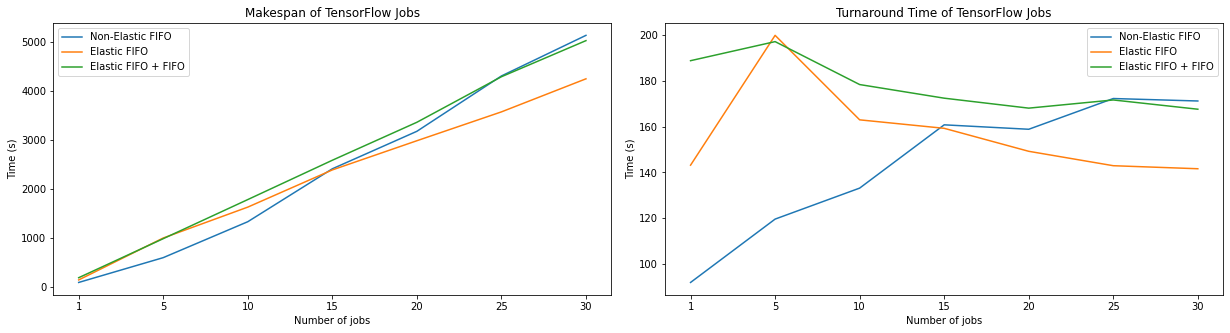

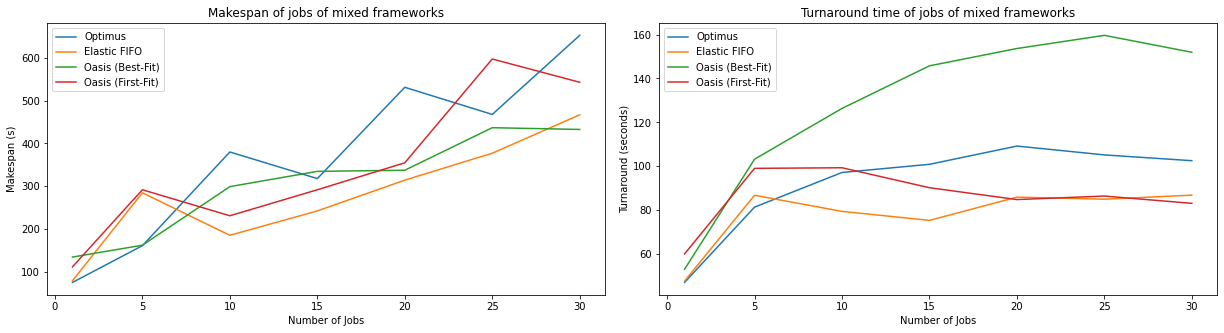

From the figure above, you can see that the makespan of TensorFlow jobs was reduced by almost 10% with Elastic FIFO. The turnaround time of TensorFlow jobs was also reduced by about 50% with Elastic FIFO.

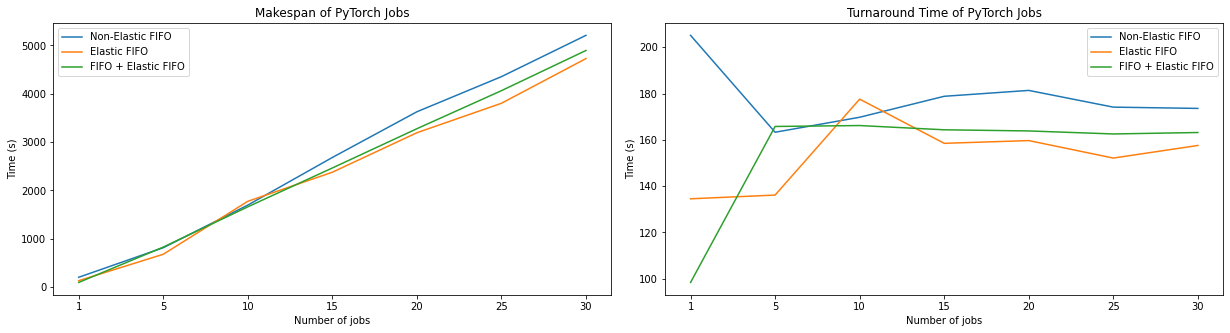

Unlike TensorFlow jobs, PyTorch jobs benefited much less from Elastic FIFO. The makespan was reduced by only about 5%, while the turnaround time was reduced by about 10%.
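For readers unfamiliar with the two metrics, here is a small sketch using their usual definitions: makespan is the time from the first submission to the last completion of a batch, and turnaround time is completion time minus submission time for each job, averaged here over the batch. The post does not state exactly how the figures were computed, so treat these definitions, the `JobRecord` fields, and the sample numbers as assumptions.

```python
from dataclasses import dataclass


@dataclass
class JobRecord:
    name: str
    submitted_at: float  # seconds
    finished_at: float   # seconds


def makespan(jobs: list[JobRecord]) -> float:
    """Time from the earliest submission to the latest completion."""
    return max(j.finished_at for j in jobs) - min(j.submitted_at for j in jobs)


def mean_turnaround(jobs: list[JobRecord]) -> float:
    """Average of (finish - submit) over all jobs."""
    return sum(j.finished_at - j.submitted_at for j in jobs) / len(jobs)


# Hypothetical batch of three jobs.
batch = [
    JobRecord("a", 0.0, 100.0),
    JobRecord("b", 10.0, 250.0),
    JobRecord("c", 20.0, 280.0),
]
print(makespan(batch))         # 280.0
print(mean_turnaround(batch))  # 200.0
```

The two metrics can move independently: speeding up one long job shortens the makespan, while turnaround improves only when jobs on average spend less time between submission and completion.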

This project was no easy task. To be able to make one, one should be familiar with the basics of Docker, system design of a backend, and the internals of Horovod. I had to learn all of them from scratch, and I am glad that I did it.

Before you go, here are some takeaways from this project: