
Introduction

A recurrent neural network (RNN) is a type of neural network designed to handle sequential data. However, traditional RNNs have limited short-term memory and struggle to retain information over long sequences. They also suffer from the vanishing gradient problem: during backpropagation, the gradients become so small that they can no longer update the weights effectively.
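As a small numerical illustration of the vanishing gradient, the sketch below uses a hypothetical one-unit RNN, h_t = tanh(w * h_{t-1} + x_t), with made-up values for w and the inputs. The gradient of the last state with respect to the initial state is a product of one factor per time step, and each factor has magnitude of at most |w|, so with |w| < 1 it shrinks geometrically as the sequence gets longer.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Return |d h_T / d h_0| for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - tanh^2(...)) = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return abs(grad)

# Made-up weight and inputs, chosen only to show the trend.
short_grad = gradient_through_time(0.5, [0.1] * 5)    # 5 time steps
long_grad = gradient_through_time(0.5, [0.1] * 50)    # 50 time steps
print(short_grad, long_grad)
```

With these values the 50-step gradient is many orders of magnitude smaller than the 5-step one, which is why early time steps barely influence the weight updates.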

In this post, we discuss Long Short-Term Memory (LSTM), a special type of RNN that addresses the problems above. LSTMs were introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997 [1]. Because LSTMs can retain information over longer spans, they are widely used in applications such as speech recognition, language modeling, and stock prediction.

Specifically, we are going to predict stock prices with LSTM networks using Keras 2.

Data Preparation

Download the NVIDIA stock price dataset from Yahoo! Finance. After that, load the CSV file into your Jupyter Notebook and convert the date column to datetime.

import pandas as pd

nvidia_df = pd.read_csv('data/NVDA.csv')
nvidia_df['Date'] = pd.to_datetime(nvidia_df['Date'])
nvidia_df.head()

Once the CSV file is loaded, scale the data using the MinMaxScaler. Scaling brings all features into the same range (0 to 1 by default), which helps the model learn and prevents features with large values from dominating the others.
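To see roughly what min-max scaling does to each column, the sketch below applies the formula x' = (x - min) / (max - min) by hand with NumPy. The array values are made up for illustration, and the manual formula is assumed to match the scaler's default 0-to-1 range.

```python
import numpy as np

# Made-up values: column 0 mimics a price, column 1 mimics a trading volume.
toy = np.array([[10.0, 1_000_000.0],
                [12.0, 3_000_000.0],
                [11.0, 2_000_000.0]])

col_min = toy.min(axis=0)
col_max = toy.max(axis=0)

# Scale each column independently into the range [0, 1].
toy_scaled = (toy - col_min) / (col_max - col_min)

# Reverse the scaling to recover the original units.
toy_restored = toy_scaled * (col_max - col_min) + col_min

print(toy_scaled)
```

After scaling, both columns span the same range even though the raw volume values are several orders of magnitude larger than the prices.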

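from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler()
# Scale only the numeric columns; the datetime 'Date' column cannot be scaled.
scaled_data = scaler.fit_transform(nvidia_df.drop(columns=['Date']))

After scaling the data, split the dataset into sequences for the LSTM model.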

import numpy as np

def generate_sequences(dataset, length):
    # Each input is a window of `length` consecutive rows; its target is the next row.
    X = np.array([dataset[i:i+length] for i in range(len(dataset) - length)])
    y = np.array([dataset[i+length] for i in range(len(dataset) - length)])
    return X, y

sequence_length = 10
X, y = generate_sequences(scaled_data, sequence_length)

The function above splits the dataset into overlapping windows of 10 rows, like a sliding window: each window is one input sequence, and the row that immediately follows it is the target. This way, the LSTM sees the previous 10 time steps when making each prediction.
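The sliding window is easier to see on a tiny array. The sketch below repeats generate_sequences on made-up data with 6 time steps and 2 features, using a window of 3 instead of 10. The names toy, target_col, and y_single are hypothetical and only serve the illustration.

```python
import numpy as np

def generate_sequences(dataset, length):
    # Each input is a window of `length` rows; its target is the row right after it.
    X = np.array([dataset[i:i+length] for i in range(len(dataset) - length)])
    y = np.array([dataset[i+length] for i in range(len(dataset) - length)])
    return X, y

# Toy data: 6 time steps, 2 features (values are made up).
toy = np.arange(12).reshape(6, 2)

X_toy, y_toy = generate_sequences(toy, length=3)
print(X_toy.shape)  # (3, 3, 2): 3 windows, 3 time steps each, 2 features
print(y_toy.shape)  # (3, 2): one target row per window

# Hypothetical variation: keep a single column as the prediction target.
target_col = 1
y_single = y_toy[:, target_col]
```

Note that y has one value per feature column. If the scaled data has more than one column and the model ends in a single output unit, selecting one target column as in the last two lines may be what is wanted; which column to use is an assumption that depends on the CSV.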

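Finally, split the dataset into training and testing sets. Passing shuffle=False keeps the sequences in chronological order, so the model is trained on the earliest 80% of them and tested on the most recent 20%.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, shuffle=False)

Model Building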

We are going to build an LSTM model using the Keras library. The model will consist of three LSTM layers with 50 units each, with a dropout layer after each one and a dense output layer at the end.

from keras.models import Sequential
from keras.layers import LSTM, Dropout, Dense

model = Sequential([
    LSTM(
        units=50,
        return_sequences=True,
        # (time steps, features); shape[0] is the number of samples and does not belong here
        input_shape=(X_train.shape[1], X_train.shape[2])
    ),
    Dropout(0.2),
    LSTM(
        units=50,
        return_sequences=True
    ),
    Dropout(0.2),
    LSTM(units=50),
    Dropout(0.2),
    Dense(units=1, activation='relu')
])

model.compile(optimizer='adam', loss='mean_squared_error')

We could add more LSTM layers. However, the model would then start fitting the noise in the dataset. In other words, it would overfit and would not be good at predicting future values. Besides, our dataset is small, so having more than three LSTM layers would not be beneficial.
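To give a rough sense of how large this model is relative to a small dataset, the sketch below counts its trainable parameters by hand. It assumes the usual Keras LSTM layout (four gates, each with input weights, recurrent weights, and a bias) and an assumed n_features of 6; the real value is X_train.shape[2]. The helper lstm_params is hypothetical, not a Keras function.

```python
def lstm_params(input_dim, units):
    # Four gates, each with (input_dim + units) weights per unit plus one bias per unit.
    return 4 * ((input_dim + units) * units + units)

n_features = 6   # assumption for illustration; use X_train.shape[2] in practice
units = 50

layer_counts = [
    lstm_params(n_features, units),  # first LSTM reads the raw features
    lstm_params(units, units),       # second LSTM reads 50 outputs per time step
    lstm_params(units, units),       # third LSTM
    units * 1 + 1,                   # Dense(1): 50 weights + 1 bias
]
total_params = sum(layer_counts)
print(layer_counts, total_params)
```

Under these assumptions, each additional 50-unit LSTM layer adds 20,200 parameters, which is considerable when the training data is limited. Calling model.summary() on the actual model should report the real counts.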

Model Training

We are going to train the model on the scaled training data for 100 epochs, then evaluate it and generate predictions on the test set.

model.fit(X_train, y_train, epochs=100, batch_size=32, verbose=0)
loss = model.evaluate(X_test, y_test)
predictions = model.predict(X_test)

Conclusion

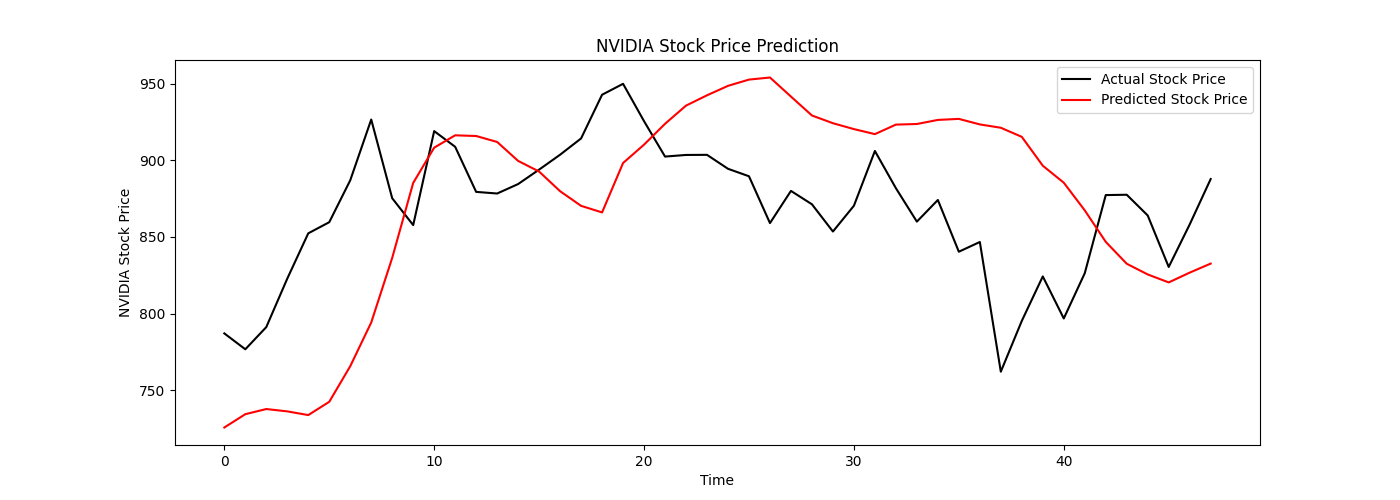

Nvidia stock price truth vs prediction values


From the graph above, we can see that the model captures the overall trend of the stock price, even though it is not perfect. Given that the dataset is small and the available information is limited, the model is able to predict the stock price to some extent.
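One way to put a number on "to some extent" is to undo the scaling and compute the error in price units. The sketch below does this with NumPy on made-up scaled values. The names price_min and price_max are hypothetical stand-ins for the minimum and maximum the scaler learned for the price column, and none of the numbers come from the actual model.

```python
import numpy as np

# Hypothetical bounds of the price column as seen by the scaler.
price_min, price_max = 100.0, 200.0

# Made-up scaled ground truth and predictions, for illustration only.
y_true_scaled = np.array([0.50, 0.60, 0.70])
y_pred_scaled = np.array([0.52, 0.57, 0.74])

def unscale(values):
    # Inverse of min-max scaling to the range [0, 1].
    return values * (price_max - price_min) + price_min

y_true_price = unscale(y_true_scaled)
y_pred_price = unscale(y_pred_scaled)

# Root mean squared error, expressed in the same units as the price.
rmse = np.sqrt(np.mean((y_true_price - y_pred_price) ** 2))
print(rmse)
```

An error expressed in price units is easier to interpret than the mean squared error on scaled data that model.evaluate reports.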

References

- Christopher Olah. Understanding LSTM Networks. https://colah.github.io/posts/2015-08-Understanding-LSTMs/.

Footnotes

1. Sepp Hochreiter and Jürgen Schmidhuber. Long Short-Term Memory.