RMSprop with momentum

Adaptive Moment Estimation (Adam) is an optimization algorithm that is inspired from Adagrad and RMSprop optimization algorithms. Remember that Adagrad and RMSprop have their own limitations.

In the case of Adagrad, the learning rate diminishes over time and becomes too small since the algorithm takes account all of the previous gradients. Thus making the model stops learning.

Even though RMSprop solves the problem of Adagrad by taking account only the average of the previous gradients, it still stuffers from the same problem as Adagrad.

To solve the limitations of Adagrad and RMSprop, Adam introduces the concept of momentum. Let’s see how Adam works.

The parameter update rule is expressed as

where

The corrected first moment estimate is expressed as

where

The corrected second moment estimate is expressed as

where

It’s worth mentioning is that the first moment estimate and the second moment estimate work just like Momentum to maintain directionality since they both take account of the previous gradients. By accumulating previous gradients, Adam accelerates convergence especially in the area with small gradients.

The reason why the first moment estimate has to be corrected is that the first moment estimate is biased to smaller values at the beginning of the training when it is zero or close to zero. The bias could lead to overly aggresive parameter updates, instability, or slow convergence. Similarly with the second moment estimate .

Since we only have two parameters, we are going to need to represent the gradient of the cost function with respect to the intercept, and to represent the gradient of the cost function with respect to the coefficient. These two can be expressed as follows:

First, calculate the intercept and the coefficient gradient. Notice that the intercept gradient is the prediction error.

error = prediction - y[random_index]intercept_gradient = errorcoefficient_gradient = error * x[random_index]Second, calculate the first moment estimate and the second moment estimate .

momentum = momentum_decay_rate * momentum + (1 - momentum_decay_rate) * intercept_gradientvariance = variance_decay_rate * variance + (1 - variance_decay_rate) * (coefficient_gradient ** 2)Third, correct the first moment estimate and the second moment estimate .

corrected_momentum = momentum / (1 - momentum_decay_rate ** epoch)corrected_variance = variance / (1 - variance_decay_rate ** epoch)Finally, update the intercept and the coefficient.

intercept = intercept - learning_rate * corrected_momentum / (np.sqrt(corrected_variance) + eps)coefficient = coefficient - learning_rate * corrected_momentum / (np.sqrt(corrected_variance) + eps)

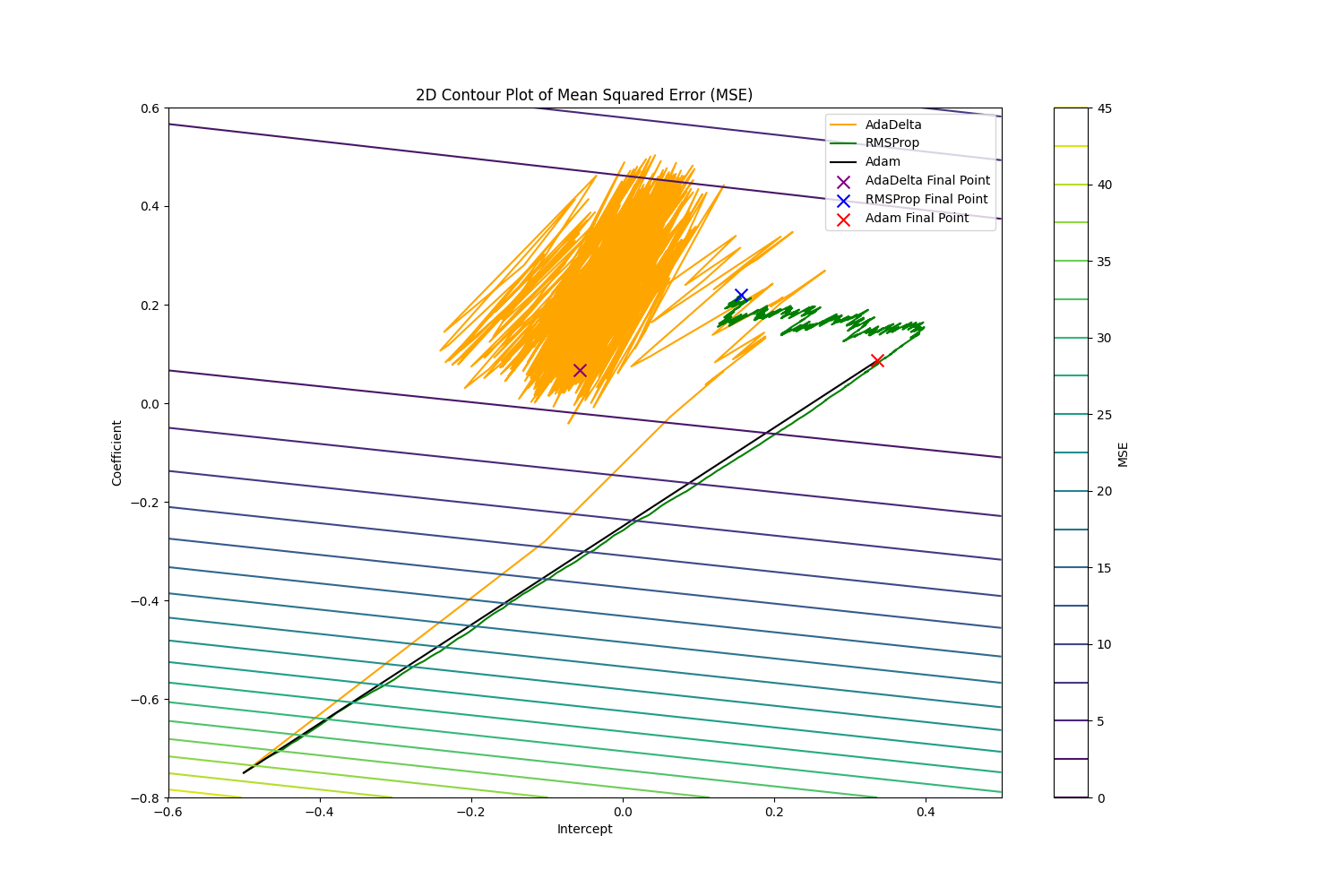

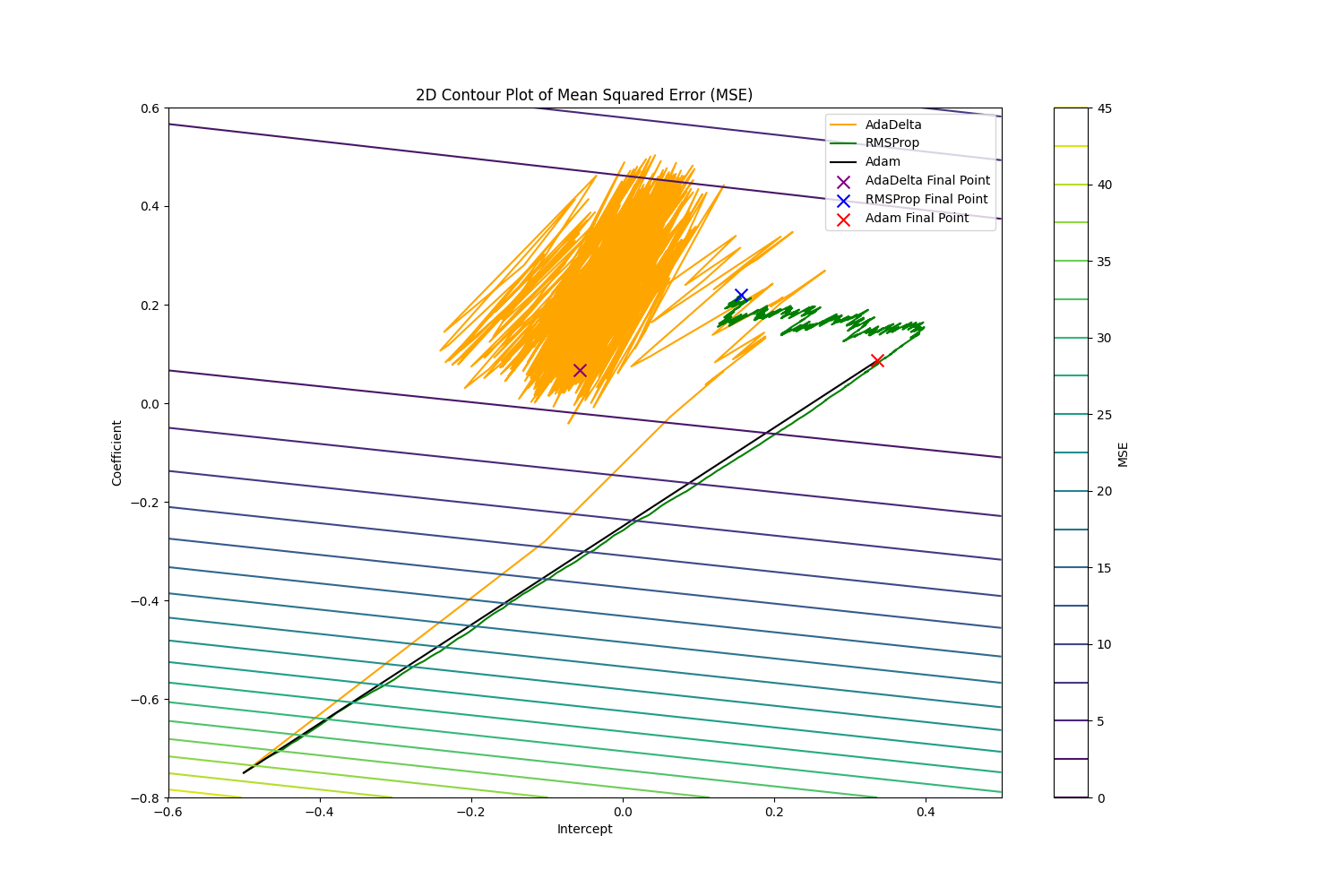

From the figure above, we can see that the pathway Adam took a direct path down the hill compared to the other two. That proves that Adam can accelerate convergence especially in the area with small gradients and avoid frequent updates with the help of Momentum.

def adam(x, y, df, epochs=1000, learning_rate=0.01, eps=1e-8): intercept, coefficient = -0.5, -0.75 momentum_decay_rate, variance_decay_rate = 0.9, 0.999 momentum, variance = 0.0, 0.0

random_index = np.random.randint(len(x)) prediction = predict(intercept, coefficient, x[random_index]) error = prediction - y[random_index] df.loc[0] = [intercept, coefficient, momentum, variance, (error ** 2) / 2]

for epoch in range(1, epochs + 1): random_index = np.random.randint(len(x)) prediction = predict(intercept, coefficient, x[random_index]) error = prediction - y[random_index]

intercept_gradient = error coefficient_gradient = error * x[random_index]

momentum = momentum_decay_rate * momentum + (1 - momentum_decay_rate) * intercept_gradient variance = variance_decay_rate * variance + (1 - variance_decay_rate) * (coefficient_gradient ** 2)

corrected_momentum = momentum / (1 - momentum_decay_rate ** epoch) corrected_variance = variance / (1 - variance_decay_rate ** epoch)

intercept = intercept - learning_rate * corrected_momentum / (np.sqrt(corrected_variance) + eps) coefficient = coefficient - learning_rate * corrected_momentum / (np.sqrt(corrected_variance) + eps)

df.loc[epoch] = [intercept, coefficient, corrected_momentum, corrected_variance, (error ** 2) / 2]

return df