Minimizing cost functions with a subset of the dataset

In the Mathematics of Gradient Descent post, we discussed what Gradient Descent is, how it works, and how to derive the equations needed to update the parameters of the model.

In this post, we are going to write Batch Gradient Descent from scratch in Python.

Throughout this series, we are going to use the Iris dataset from the UCI Machine Learning Repository, loaded through scikit-learn.

We are going to analyse two features of the dataset, `sepal_length` and `petal_width`, which are extracted in the code below.

```python
import numpy as np
from sklearn.datasets import load_iris

iris = load_iris()
features = iris.data
target = iris.target

# Column 0 is sepal length and column 3 is petal width (both in cm)
sepal_length = np.array(features[:, 0])
petal_width = np.array(features[:, 3])

# Map the numeric class labels to species names for plotting
species_map = {0: 'setosa', 1: 'versicolor', 2: 'virginica'}
species_names = [species_map[i] for i in target]
```

Before implementing Batch Gradient Descent in Python, we need a baseline to compare our own implementation against.

For the baseline, we use the linear regression model built into scikit-learn.

First, let's fit the `LinearRegression()` model from `sklearn.linear_model`, using `sepal_length` as the input and `petal_width` as the target.

```python
from sklearn.linear_model import LinearRegression

linreg = LinearRegression()
# scikit-learn expects a 2-D input, so reshape the single feature into a column
linreg.fit(X=sepal_length.reshape(-1, 1), y=petal_width.reshape(-1, 1))

print("Intercept:", linreg.intercept_[0])
# Intercept: -3.200215
print("First coefficient:", linreg.coef_[0][0])
# First coefficient: 0.75291757
```

Once we have the intercept and the coefficient, let's plot the regression line to check whether it passes close to most of the data points.

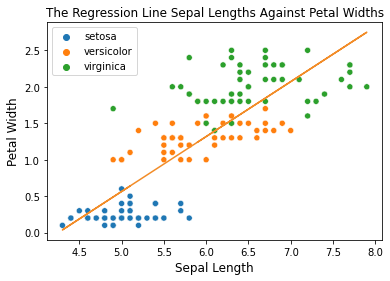

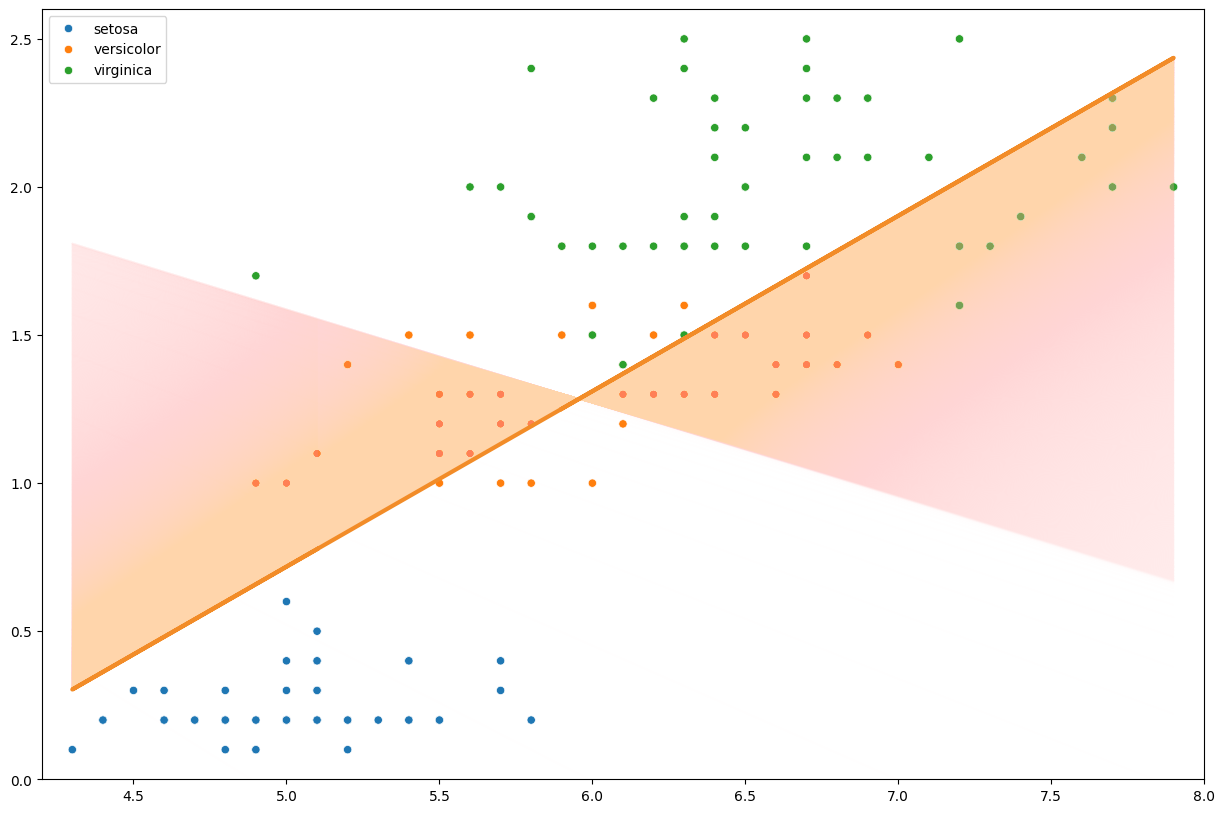

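```python
import matplotlib.pyplot as plt
import seaborn as sns

# Scatter plot of the data, coloured by species
sns.scatterplot(x=sepal_length, y=petal_width, hue=species_names)

# Regression line built from the fitted intercept and coefficient
plt.plot(sepal_length, linreg.intercept_[0] + linreg.coef_[0][0] * sepal_length, color='red')
plt.show()
```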

The line lies close to most of the data points. Next, let's compute the mean squared error (MSE) of this regression line.
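The MSE is simply the mean of the squared residuals. The minimal NumPy sketch below spells out that definition; the helper names `mse` and `half_mse_cost` are our own, not part of scikit-learn, and for a single target `mse` should agree with `sklearn.metrics.mean_squared_error`. The second helper is the cost J = MSE / 2, which is what the `bgd` function at the end of this post computes and stores as `mse`, so that value has to be doubled before it is compared with the scikit-learn baseline.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error: the average of the squared residuals."""
    # ravel() flattens column vectors such as the (n, 1) output of linreg.predict
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    return np.mean((y_pred - y_true) ** 2)

def half_mse_cost(y_true, y_pred):
    """Cost J = MSE / 2; the 1/2 cancels the 2 produced when differentiating."""
    return mse(y_true, y_pred) / 2

# Hand-checkable example: the residuals are 0.5, -0.5 and 1.0
y_true = np.array([1.0, 2.0, 3.0])
y_pred = np.array([1.5, 1.5, 4.0])
print(mse(y_true, y_pred))            # (0.25 + 0.25 + 1.0) / 3 = 0.5
print(half_mse_cost(y_true, y_pred))  # 0.25
```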

```python
from sklearn.metrics import mean_squared_error

# Predictions of the fitted baseline model
linreg_predictions = linreg.predict(sepal_length.reshape(-1, 1))
linreg_mse = mean_squared_error(petal_width, linreg_predictions)
print(f"The MSE is {linreg_mse}")
# The MSE is 0.19101500769427357
```

From the scikit-learn result, the best regression line is

$$\hat{y} = -3.200215 + 0.75291757\,x$$

where $x$ is the sepal length and $\hat{y}$ is the predicted petal width, with an MSE of around 0.191. This line is our baseline for judging how well our own Gradient Descent implementation performs.
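Because `LinearRegression` fits ordinary least squares, its intercept and coefficient for a single feature should match the textbook closed-form formulas: the slope is the covariance of x and y divided by the variance of x, and the line passes through the point of means. The sketch below is a hypothetical helper (`closed_form_fit` is our name, not a scikit-learn function) that gives a second, independent check of the baseline.

```python
import numpy as np

def closed_form_fit(x, y):
    """Ordinary least squares for one feature; returns (intercept, coefficient)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    # Slope = cov(x, y) / var(x); the 1/n factors cancel, so plain sums suffice
    coefficient = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    # The least-squares line always passes through (x_mean, y_mean)
    intercept = y_mean - coefficient * x_mean
    return intercept, coefficient

# Points lying exactly on y = 1 + 2x recover intercept 1 and coefficient 2
x_demo = np.array([0.0, 1.0, 2.0, 3.0])
y_demo = 1.0 + 2.0 * x_demo
intercept, coefficient = closed_form_fit(x_demo, y_demo)
print(intercept, coefficient)  # 1.0 2.0
```

With the Iris arrays from earlier, `closed_form_fit(sepal_length, petal_width)` should reproduce the baseline intercept and coefficient up to floating-point error.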

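The parameter update rule is expressed as

$$\theta_j := \theta_j - \alpha \frac{\partial J(\theta_0, \theta_1)}{\partial \theta_j}, \qquad j \in \{0, 1\}$$

where $\theta_0$ is the intercept, $\theta_1$ is the coefficient, $\alpha$ is the learning rate, and $J$ is the cost function over all $m$ training samples:

$$J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left( \hat{y}^{(i)} - y^{(i)} \right)^2, \qquad \hat{y}^{(i)} = \theta_0 + \theta_1 x^{(i)}$$

The gradients of the cost function with respect to the intercept and the coefficient are

$$\frac{\partial J}{\partial \theta_0} = \frac{1}{m} \sum_{i=1}^{m} \left( \hat{y}^{(i)} - y^{(i)} \right)$$

$$\frac{\partial J}{\partial \theta_1} = \frac{1}{m} \sum_{i=1}^{m} \left( \hat{y}^{(i)} - y^{(i)} \right) x^{(i)}$$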

For more details, please refer to the Mathematics of Gradient Descent post.
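A common sanity check for hand-derived gradients is to compare them against central finite differences of the cost. The sketch below assumes the cost J = (1 / 2m) Σ (prediction − y)², which is the quantity the `bgd` code in this post computes; the helper names and the four data points are made up for illustration, and the starting parameters 2.0 and -7.5 are the initial values used in `bgd`.

```python
import numpy as np

def cost(intercept, coefficient, x, y):
    """J = (1 / 2m) * sum((prediction - y)^2)."""
    error = intercept + coefficient * x - y
    return np.sum(error ** 2) / (2 * len(x))

def analytic_gradients(intercept, coefficient, x, y):
    """Hand-derived gradients of J w.r.t. the intercept and the coefficient."""
    error = intercept + coefficient * x - y
    return np.sum(error) / len(x), np.sum(error * x) / len(x)

def numeric_gradients(intercept, coefficient, x, y, h=1e-6):
    """Central finite differences of J, used only to check the formulas."""
    d_intercept = (cost(intercept + h, coefficient, x, y)
                   - cost(intercept - h, coefficient, x, y)) / (2 * h)
    d_coefficient = (cost(intercept, coefficient + h, x, y)
                     - cost(intercept, coefficient - h, x, y)) / (2 * h)
    return d_intercept, d_coefficient

# Made-up points in the same range as the Iris measurements (not real data)
x = np.array([4.9, 5.8, 6.3, 7.1])
y = np.array([0.2, 1.2, 1.8, 2.1])
print(analytic_gradients(2.0, -7.5, x, y))
print(numeric_gradients(2.0, -7.5, x, y))  # should agree to several decimals
```

Since J is quadratic in both parameters, the central difference is exact apart from rounding, so any real mismatch points to an error in the derivation.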

First, define the prediction function.

```python
def predict(intercept, coefficient, x):
    # Linear model: prediction = intercept + coefficient * x (element-wise on NumPy arrays)
    return intercept + coefficient * x
```

Second, compute the prediction error and the gradients of the cost function with respect to the intercept and the coefficient.

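```python
length = len(x)
predictions = predict(intercept, coefficient, x)
error = predictions - y

# Average over the whole dataset, matching the gradient formulas
intercept_gradient = np.sum(error) / length
coefficient_gradient = np.sum(error * x) / length
```

Lastly, update the intercept and the coefficient.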

```python
intercept = intercept - alpha * intercept_gradient
coefficient = coefficient - alpha * coefficient_gradient
```
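To make the three steps concrete, here is one update worked through on a tiny dataset. This is a minimal sketch: `bgd_step` is a hypothetical helper that bundles the prediction, gradient and update steps, and the data, starting values and learning rate are chosen only so the arithmetic can be checked by hand.

```python
import numpy as np

def bgd_step(intercept, coefficient, x, y, alpha):
    """One Batch Gradient Descent update using every sample in x and y."""
    length = len(x)
    error = (intercept + coefficient * x) - y
    intercept_gradient = np.sum(error) / length
    coefficient_gradient = np.sum(error * x) / length
    # Both gradients come from the old parameters before either one changes
    return (intercept - alpha * intercept_gradient,
            coefficient - alpha * coefficient_gradient)

# Tiny dataset on the line y = 2x, starting from intercept = coefficient = 0
x = np.array([1.0, 2.0, 3.0])
y = np.array([2.0, 4.0, 6.0])
# error = [-2, -4, -6], so intercept_gradient = -12 / 3 = -4
# and coefficient_gradient = (-2 - 8 - 18) / 3 = -28 / 3
intercept, coefficient = bgd_step(0.0, 0.0, x, y, alpha=0.1)
print(intercept, coefficient)  # roughly 0.4 and 0.9333
```

The cost drops from 56 / 6 ≈ 9.33 before the step to about 1.88 after it, which is the behaviour repeated over many epochs in the graphs below.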

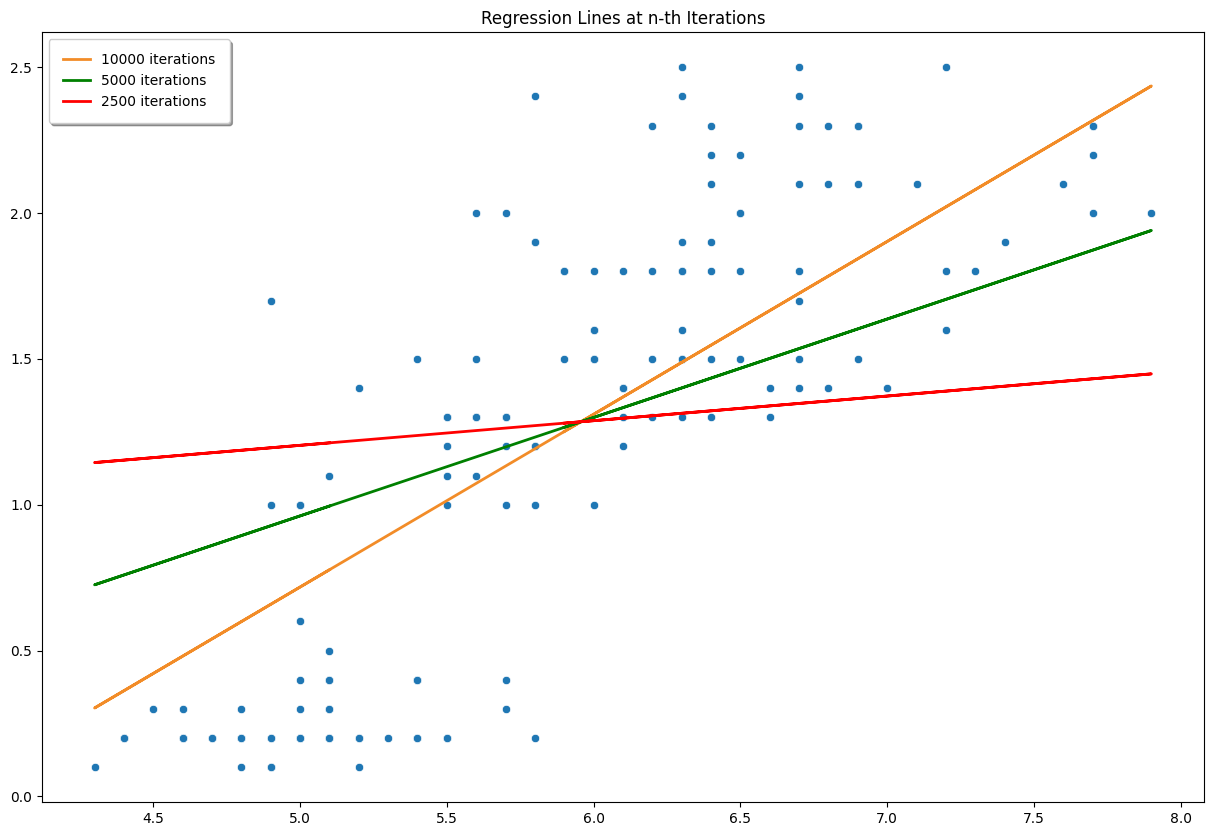

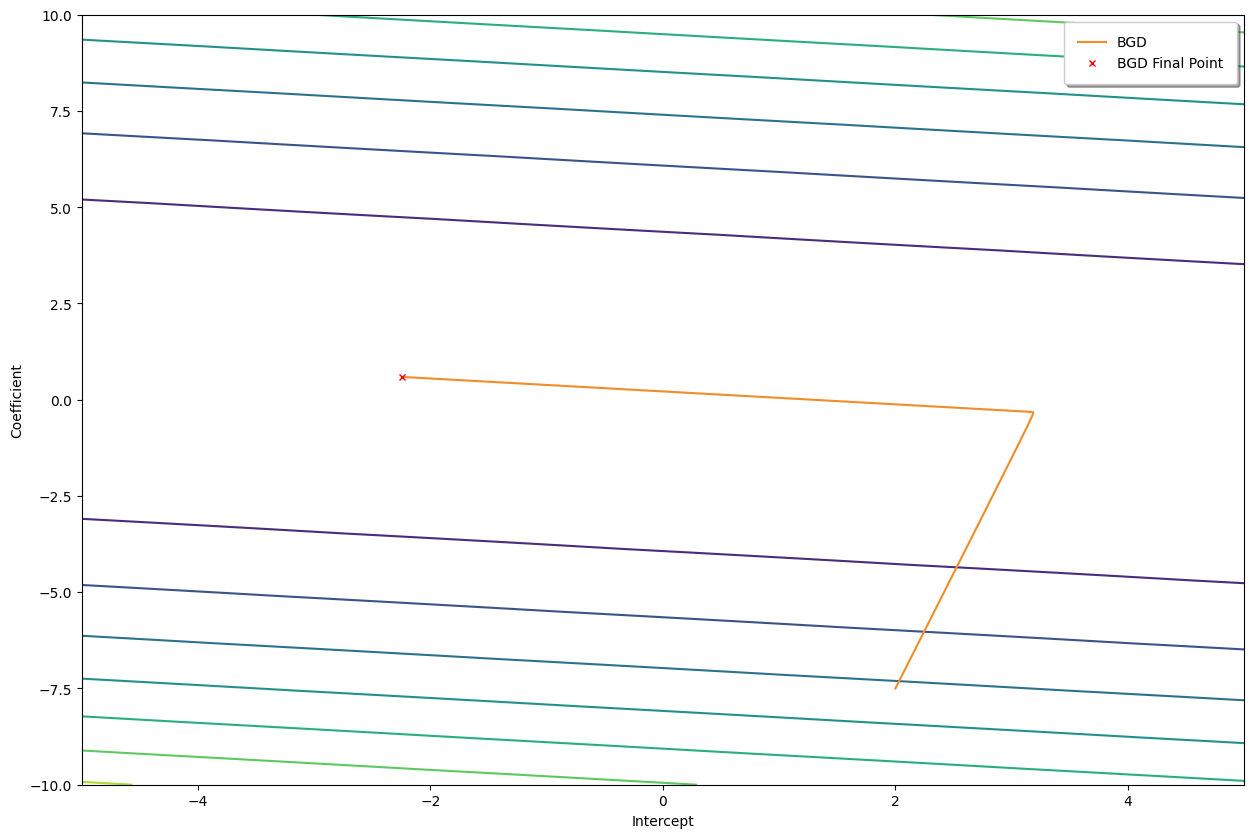

From the graphs above, we can see how the regression line changes from iteration to iteration. After iterations, the MSE value of our own Gradient Descent is , which is quite close to our baseline of around 0.191.

Here are some key points for Batch Gradient Descent:

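```python
import numpy as np

def bgd(x, y, epochs, df, alpha=0.01):
    # df: a pandas DataFrame with three columns; one row is written per epoch
    intercept, coefficient = 2.0, -7.5  # initial guesses
    length = len(x)

    # Epoch 0: the starting parameters and their cost
    error = predict(intercept, coefficient, x) - y
    mse = np.sum(error ** 2) / (2 * length)  # this is the cost J = MSE / 2
    df.loc[0] = [intercept, coefficient, mse]

    for epoch in range(1, epochs):
        # Gradients of J over the whole dataset (the "batch" in Batch Gradient Descent)
        intercept_gradient = np.sum(error) / length
        coefficient_gradient = np.sum(error * x) / length

        # Update both parameters using gradients from the same (old) parameters
        intercept = intercept - alpha * intercept_gradient
        coefficient = coefficient - alpha * coefficient_gradient

        # Cost of the updated parameters, logged on the same row as them
        error = predict(intercept, coefficient, x) - y
        mse = np.sum(error ** 2) / (2 * length)
        df.loc[epoch] = [intercept, coefficient, mse]

    return df
```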
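For experimenting without a pandas DataFrame, the sketch below is a compact variant that keeps its history in a NumPy array. It is our own restructuring rather than the `bgd` function above: the name `bgd_history`, the synthetic data, the zero starting point, the learning rate and the number of epochs are all assumptions chosen so that the run converges quickly. On data like this, Batch Gradient Descent should approach the least-squares solution that `np.polyfit` returns.

```python
import numpy as np

def bgd_history(x, y, epochs, alpha=0.01, intercept=0.0, coefficient=0.0):
    """Batch Gradient Descent for y ~ intercept + coefficient * x.

    Returns the final parameters and the cost J = MSE / 2 after each epoch.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    length = len(x)
    costs = np.empty(epochs)
    for epoch in range(epochs):
        # Every sample contributes to each gradient: this is the "batch" part
        error = intercept + coefficient * x - y
        intercept_gradient = np.sum(error) / length
        coefficient_gradient = np.sum(error * x) / length
        intercept -= alpha * intercept_gradient
        coefficient -= alpha * coefficient_gradient
        # Record the cost of the parameters just produced
        error = intercept + coefficient * x - y
        costs[epoch] = np.sum(error ** 2) / (2 * length)
    return intercept, coefficient, costs

# Synthetic data scattered around y = 1 + 2x (not the Iris data)
rng = np.random.default_rng(42)
x = rng.uniform(0.0, 2.0, size=100)
y = 1.0 + 2.0 * x + rng.normal(0.0, 0.1, size=100)

intercept, coefficient, costs = bgd_history(x, y, epochs=5000, alpha=0.1)
slope_ls, intercept_ls = np.polyfit(x, y, 1)  # polyfit returns [slope, intercept]
print(intercept, coefficient)   # gradient descent estimate
print(intercept_ls, slope_ls)   # least-squares reference
```

Recording the cost after each update keeps every entry paired with the parameters that produced it, so the last value in `costs` describes the returned model.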